How should I convince my company to adopt A/B testing?

This interview is part of Kameleoon's Expert FAQs series, where we interview leading experts in data-driven CX optimization and experimentation. Ben Labay is the Managing Director at experimentation agency Speero by CXL, speaker on all things research and experimentation, artist, and conservation science consultant. Ben has spent 10+ years in academia and conservation science and 6+ years working in the marketing and digital industries doing experimentation program work.

It feels like digital A/B testing has still not reached “mainstream” despite being around for nearly two decades. What factors are holding companies back from adopting this practice?

It's against human nature and group dynamics for people to fail at scale and be 'wrong'. If you are paid to be a website owner, you're expected to have an opinion, and a strong one. Lawyers are paid to red-line, etc. This dynamic sets us up for silos, HIPPOs (highest-paid person's opinions), hierarchical business structures, and a general lack of democratization in decision-making. There's a lot of evolutionary behavior in this.

But lessons from 3M, Marriott, Microsoft, Apple, and Amazon tell us that it pays to have an innovation culture, and it's more fun. You get to work with 'yes, and' types of people and teams where there's psychological safety, locality in decision-making, and customer-centric focus.

Often, testing tools are expensive shiny objects that attract the 'solutionists'. The main reason A/B testing hasn't gone mainstream is that these tools need to be purchased alongside PROCESS CHANGES. You can't stick an experimentation tool into an organization of HIPPOs and silos and expect great things.

Process and direction are essential to getting companies to adopt experimentation; together, they form a systems approach to learning. If we have suitable systems in place, we can execute well.

Can you explain why “program management” is vital to company buy-in?

I spent six months with Native Deodorant before getting a winning test. I almost got fired three times. There were long periods of tinkering away before finally getting a substantial win. I kept at it and then hit it huge with an up-sell/cross-sell widget that's still on the site today. It added more than $250k per month in revenue to the business. I've seen the same thing happen in many experimentation programs.

The problem is that I don't believe there are silver bullets or best practices that apply universally. So we need a systems approach to our work to deliver results to the business consistently.

I have a mental model for this: Knowledge = experience + sensitivity. Experience is best practice, or 'what worked before,' while sensitivity is the statistics that tell you what works for a given data population.

That said, I'm a firm believer in certain categories of 'what to test,' such as clarity and 'choose your own journey' techniques like wizards and questionnaires. But I don't think focusing on these tactics elevates the industry or helps with buy-in. I think better processes are what lift all boats.

Which program management metrics are the best indicators of success?

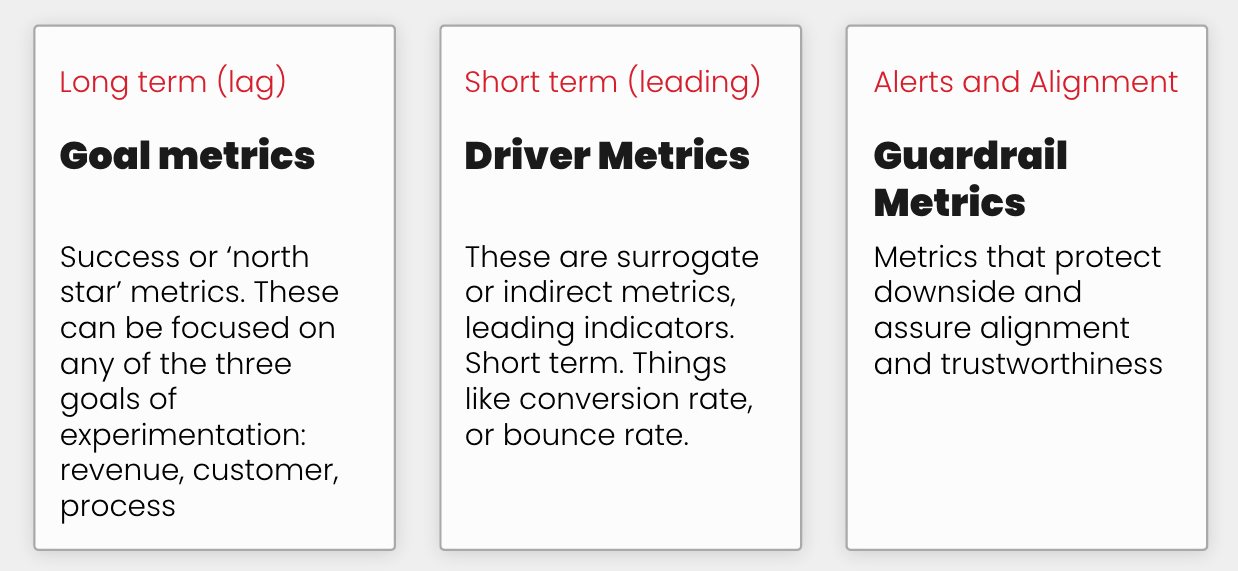

First, it makes sense to clarify a metric taxonomy; here’s mine:

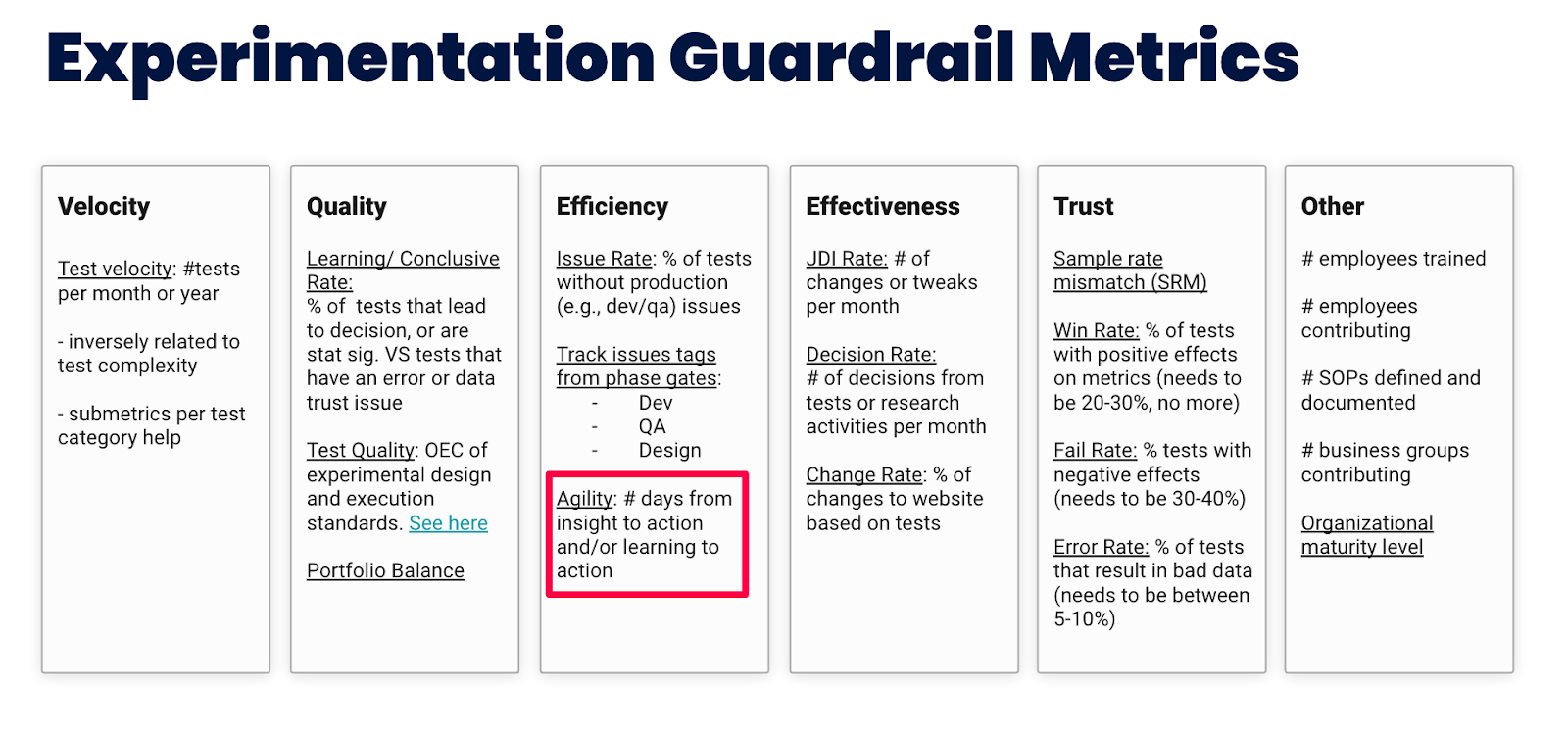

We’re talking about the ‘guardrail metrics’ specifically. Here are some crucial guardrail metrics to measure, indicating how well your testing process is working. If you improve the testing machine, you can improve the outcomes of your testing work.

"Program Agility" (highlighted below) is my favorite metric for assessing the success of an experimentation program. It measures the time it takes to go from one part of the flywheel to the next: from assessment through planning to action, from idea to decision.

The key is to make better decisions faster, so we're in flow, working on the front lines without blockers. So 'agility,' defined by the time between phases, is a core metric. It's important to note that it is an output metric, not an outcome metric. So it points squarely at the system, not at what the system is trying to change (e.g., conversion rate).
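To make 'agility' concrete, here is a minimal sketch assuming each experiment records the date it enters each phase of the flywheel. The phase names (`idea`, `planned`, `launched`, `decided`), the `phase_gaps` helper, and the sample dates are hypothetical illustrations, not Speero's actual tooling or data. The function reports the median number of days between consecutive phases, plus the end-to-end idea-to-decision time.

```python
from datetime import date
from statistics import median

# Hypothetical phase names, in flywheel order (assumption, not from the source).
PHASES = ["idea", "planned", "launched", "decided"]


def phase_gaps(experiments):
    """Return median days between consecutive phases and from idea to decision."""
    gaps = {}
    for start, end in zip(PHASES, PHASES[1:]):
        durations = [(exp[end] - exp[start]).days for exp in experiments]
        gaps[f"{start}->{end}"] = median(durations)
    end_to_end = [(exp["decided"] - exp["idea"]).days for exp in experiments]
    gaps["idea->decided"] = median(end_to_end)
    return gaps


# Made-up sample data: one dict of phase-entry dates per experiment.
experiments = [
    {"idea": date(2023, 1, 2), "planned": date(2023, 1, 9),
     "launched": date(2023, 1, 16), "decided": date(2023, 2, 1)},
    {"idea": date(2023, 1, 10), "planned": date(2023, 1, 12),
     "launched": date(2023, 1, 14), "decided": date(2023, 1, 24)},
    {"idea": date(2023, 2, 1), "planned": date(2023, 2, 15),
     "launched": date(2023, 3, 1), "decided": date(2023, 3, 15)},
]

for span, days in phase_gaps(experiments).items():
    print(f"{span}: {days} days")
```

The median is used rather than the mean so that one stalled test doesn't dominate the number. Tracked over time, a shrinking gap in a particular phase shows where blockers were removed, which is a statement about the system rather than about conversion rate.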

I've also recorded a 10-minute video that gives an overview of key CRO program metrics, which you can watch here.

You talk a lot about test program “artifacts.” Can you explain what these are and why they are important?

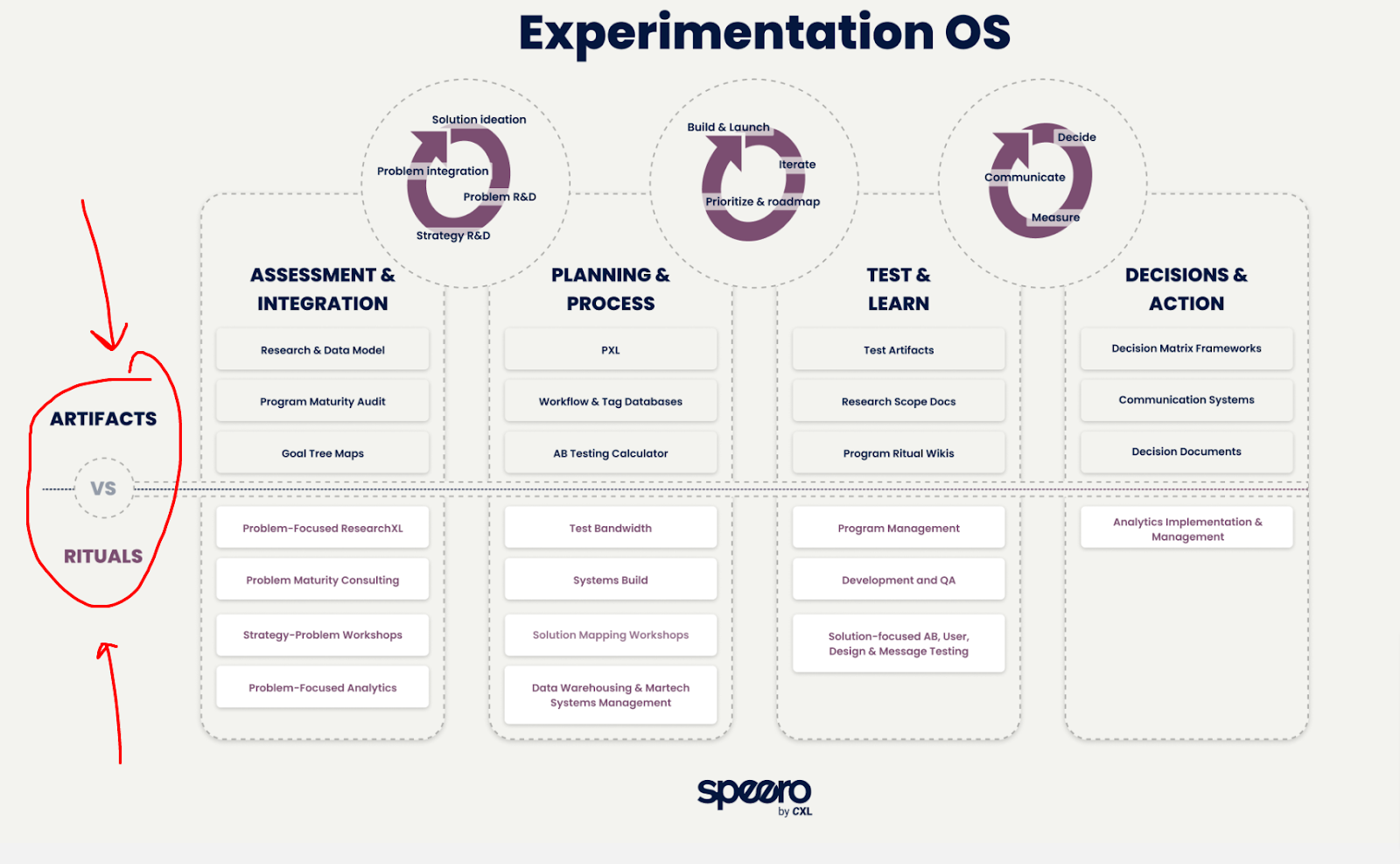

Artifacts are usually tangible tools such as documents, figures, frameworks, calculators, and even models. This is in contrast to other program management elements such as activities, rituals, or ceremonies that require a sequence of actions.

I originally got this concept from reading about the CRO program at Farfetch. In their example, a program management artifact is a test report, and a ritual is a peer review session to gather feedback on that artifact.

I've found this distinction super helpful and use it to think about our agency's entire product and marketing. For example, I want to produce artifacts and give them to the industry. Then I want to develop rituals as paid services that make money for our agency.

Here’s a simplification of it:

This is the best single-graph illustration of what we do as an agency. Note that the process and framework are key, not the specific content in the boxes. That content can change as different artifacts are needed or established in various businesses.

In practical terms, what steps can individuals take to change their business culture to support and run experimentation?

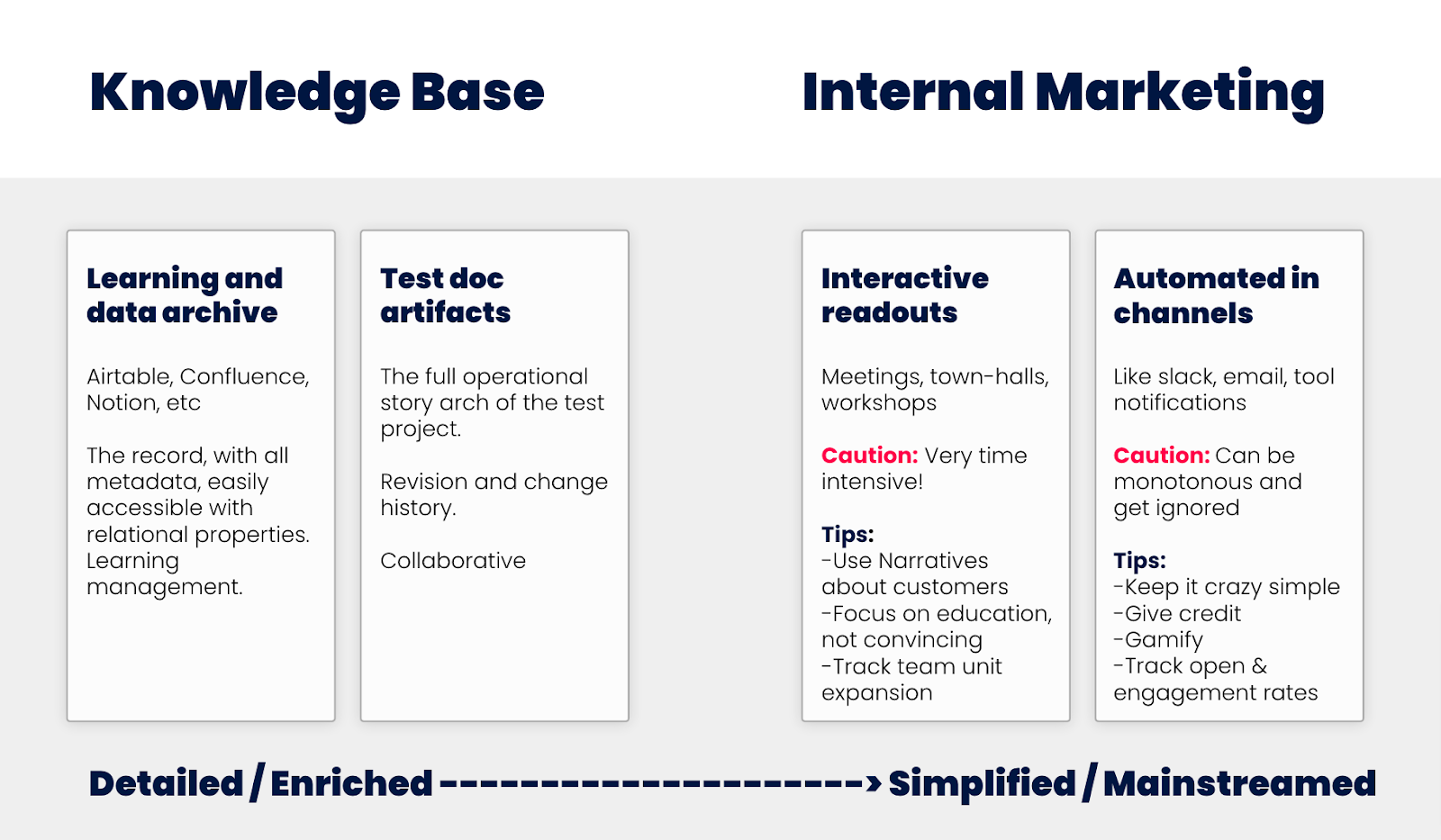

This is hard, but it makes sense to experiment on these elements themselves. It's important to try to get the right info to the right person in the right format at the right time. Here's a rough framework for 'communication' where you can see more artifacts on the left and rituals on the right.

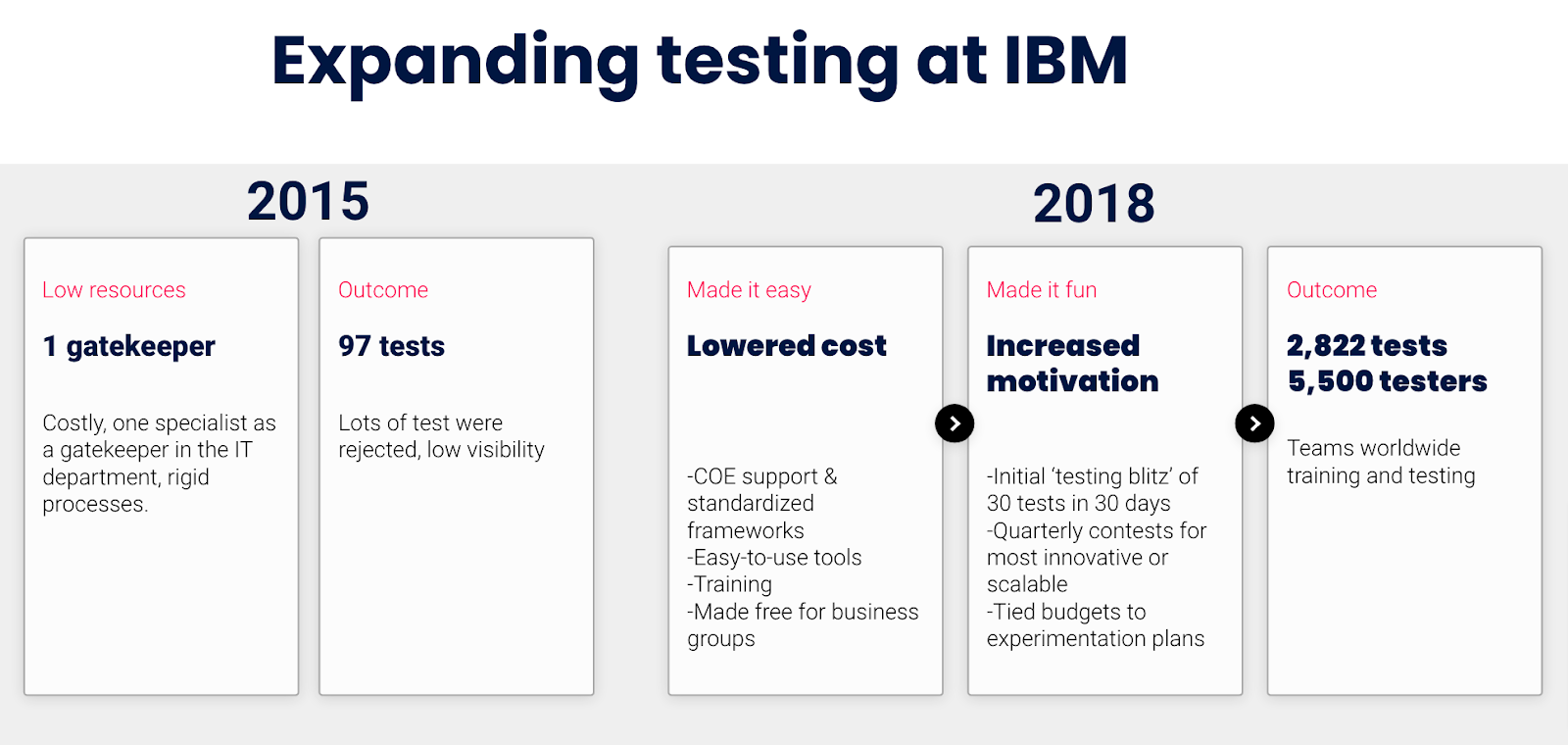

I’d also point to the case study of IBM and how they shifted their culture and scaled experimentation rapidly using cultural rituals.

The key elements IBM used to change its company culture were:

- Support from leadership (rare to this extent).

- Investment in systems for ease of use.

- Investment in intelligent incentivization (related to support from leadership).

- Gamification and communication/enrollment via contests and blitzes.

We’re intrigued by your side business, InkedAnimal, where you create amazing prints using animal specimens. Could you tell us more about the process involved in creating your art and how you got started in this?

Ha, yes. I've always loved art and used to do a ton of it in college. But there was a point when I chose science over art as a career path.

Printing animals came from a friendship and work situation where my art partner and I were often involved in collecting fish (we used fish and environmental data to model system health for state and federal governments and non-profits). Printing fish is an old art form called Gyotaku, and we just extended it to animals.

It’s how I got into web work. I built our web gallery to showcase the artwork way back in 2008, and as they say, the rest is history.