Frequentist vs Bayesian A/B Testing: which method is right for you?

A/B testing is at the heart of data-driven decision-making. Behind every test result is a statistical method.

There are two primary statistical approaches: frequentist and Bayesian.

While both methods are valid, they each reflect fundamentally different philosophies and produce different statistical outputs.

As a result, many experimenters find themselves stuck wondering which approach they should use.

The answer isn’t just a matter of math.

It comes down to speed, accuracy, business impact, and how well your team can interpret and act on the results.

To help you determine which statistical methodology you should choose for your experiments, this comprehensive guide:

- Explores the key differences between frequentism vs Bayesianism

- Gives the pros and cons of each method

- Highlights the growing role of hybrid testing

- Describes how different product team members, including marketers, managers, and engineers, can each benefit from the right statistical strategy

- Gives practical tips to translate statistical outputs into confident decisions

Whether you're launching your first experiment or running dozens across channels, understanding the frequentist and Bayesian statistical frameworks is an essential step to build trust in your data, and accelerate impact with every test you run.

The basics: probability, variance, and sample size

Before diving into the statistical concepts behind the frequentist and Bayesian methods, it can be helpful to first understand how these two statistical models work. So imagine you’re flipping a coin.

In 10 flips, you get heads 7 times. The odds are low, but certainly not impossible.

In 100 flips, you get heads 42 times.

In 1,000 flips, you get heads 496 times.

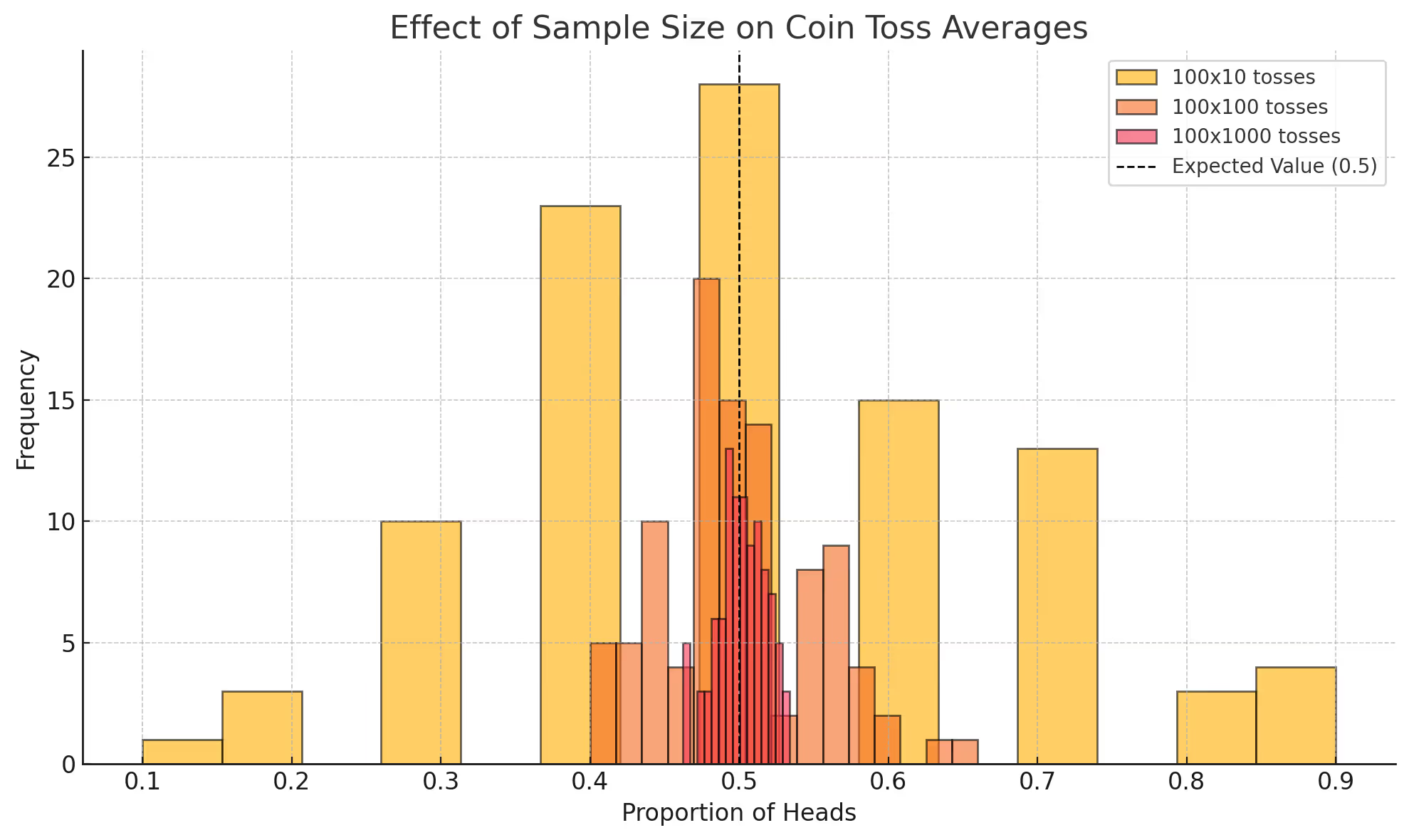

As your number of flips increases, your result gets closer and closer to an even score. Here's a simple way to visualize this outcome:

This chart shows that as the number of coin tosses increases, the results start to stabilize.

With 10 flips (yellow), the results are scattered. With 100 (orange), they begin to cluster, but with 1,000 flips, they tighten around 0.5 (50%), the expected value.

This chart illustrates a key concept of how variance shrinks with larger samples. As the sample size (in this example coin tosses) increases, the results become more predictably accurate.

The more data you have, the more reliable your estimate becomes.

Understanding how uncertainty behaves in small versus large samples is exactly what both frequentist and Bayesian methods try to manage, interpret, and act on.

In A/B testing, the reliability of your results depends on your sample size and the variance. The larger the sample size, the less variance you have in the data.

But in A/B testing, you’re not just measuring a flip of heads or tails. Instead, you’re measuring things like click-through rates, revenue, and conversions.

What are the frequentist and Bayesian methods?

Frequentist statistics

The frequentist method comes from classical statistics.

The approach starts with what’s known as the null hypothesis, which, in an A/B test, assumes there’s no difference between the control and the variant(s).

From there, it asks the important question: if there’s truly no difference between variants, how likely are the results?

That answer is a p-value, which tells you how probable your results are if there really is no difference. You also calculate confidence intervals, which reflect how much the data could vary.

This method, which has been around for over 70 years, is the most widely taught and used.

In A/B testing, it’s favored for its rigor and simplicity.

However, the downside of this method is it has no way to incorporate external knowledge or previous learnings.

Bayesian statistics

Bayesian statistics, also known as Bayesian probability, addresses this downside.

Rather than asking how likely it is that there’s no difference between the variations, Bayesian statistics flips the question.

It asks, given what’s been seen before (the prior data), how likely is it that the variant will outperform the control?

This method uses what’s called a prior distribution, which is what was previously believed, and updates it with current data to produce what’s called a posterior probability.

This posterior probability is an updated estimate that reflects the degree of belief in the outcome.

For example, in an A/B test, Bayesian statistics might conclude that version B has a 12% chance of beating the control.

While Bayesian testing isn’t currently the default approach, it’s becoming more common in modern experimentation platforms, like Kameleoon, because the method is flexible and gives clear business insights.

However, the Bayesian method isn’t a cure-all for your statistical woes. If the prior belief is wrong, it may need more data than the frequentist approach to correct that bias.

When and why to use each method

As a result, both frequentism and Bayesianism have their own strengths, limitations, and ideal use cases.

Since neither approach is infallible, which should you use?

Here’s a list of pros and cons to help you decide the best use-case for your situation:

Pros of frequentist A/B testing

1. Standard, easy to explain, and widely understood

The frequentist method is the industry standard because it’s simple to implement, widely taught, and typically understood by teams across many disciplines from the medical community to experimenters. Most stakeholders are already familiar with key frequentist terms like “p-value” and “statistical significance,” making communication about test results clearer across teams. As a result, the frequentist method is widely supported and built into nearly every A/B testing tool.

In fact, according to respected experimenter Lucia van den Brink:

[It is] easy to explain if a test is either a win, loss (learning) or non-significant. Also people naturally understand it when you say that a test has ‘a significant result’ since frequentist is the standard across medical research practices.

—Lucia van den Brink

Founder, Increase Conversion Rate

2. Reliable, trustworthy data

The frequentist method works best when you calculate your sample size ahead of time. As long as you don’t “peek” or stop your test too early, before sample size requirements have been met, you’ll get highly reliable data by calculating results using the frequentist approach.

Cons of frequentist A/B testing

1. Requires strict test setup and timing

While the frequentist method is known for providing trustworthy data, that reliability depends on proper setup and discipline during the test. To ensure valid results, you typically need to define your sample size and test duration in advance. Otherwise, if you “peek” at results mid-test and stop the test early, you risk invalidating your findings. That said, with frequentist testing, you can move from a basic A/B test to an A/B/C/D, or even a multivariate test, using frequentist techniques like ANOVA, with only minimal impact on runtime. And if you want to check results during the test without compromising statistical validity, sequential testing is available as either a frequentist or Bayesian-compatible solution.

2. Can give statistical significance without practical insight

Frequentist testing gives you a p-value, which tells you the probability of your result in a world where it was only caused by chance. But this statistical significance doesn’t always translate into meaningful business impact. For example, a result might reach 95% confidence but show only a tiny lift that’s too small to justify implementation costs. Without pairing statistical findings with business context, there’s a risk of acting on results that are technically valid but strategically weak.

Pros of Bayesian A/B testing

Many teams are now gravitating toward Bayesian approaches, not because they’re statistically superior, but because the way results are presented tends to be more intuitive for business leaders to understand and act on.

1. Interpret results more naturally

Bayesian testing expresses results in a way that aligns with how people naturally think. Although frequentist terms are widely used, they can be counter-intuitive. For example, instead of saying “if the results were random, there would be a 5% chance this happens,” as in frequentist methods, Bayesian analysis tells you directly how likely it is that a variant will outperform the control. This output helps marketers, product managers, and stakeholders make faster, more confident decisions. This clarity makes Bayesian a compelling choice for product managers, marketers, and anyone looking to act on results without needing to navigate layers of statistical nuance.

As experimentation expert Ellie Hughes explains:

The direction of confidence in Bayesian analysis is more intuitive to explain to business leaders. With frequentist methods, we’re not expressing a probability, we’re showing how confident we are in the method used to obtain the result. For a 95% confidence interval, we’re essentially saying there’s a 5% chance we’re wrong, which can feel backward or confusing. The Bayesian model flips that. It tells you directly how confident you can be that a change is real and meaningful. Most modern A/B testing tools are now investing in proprietary statistical engines that provide this kind of insight, not because they’re mathematically purer, but because they help teams make faster, better decisions by communicating results in a clearer way.

—Ellie Hughes

Head of Consulting, Eclipse Group

2. Incorporates past learnings

Bayesian testing allows you to include prior knowledge, like past test outcomes or historical data, into your analysis. This data can speed up time-to-decision and reduce the sample size needed, especially when similar tests have been run before.

By layering past insights into new experiments, Bayesian methods enable smarter, more context-aware testing. Because Bayesian methods focus on the probability of real improvement, not just statistical significance, this approach reduces the risk of false positives, so you can more confidently act on test results. The Bayesian approach works particularly well when tracking performance across continuous metrics like average order value, time on site, or engagement score, where nuance matters.

Cons of Bayesian A/B testing

1. Creates struggle without historical data

However, Bayesian methods are only as good as the prior assumptions they rely on. If your prior is well-founded and reflective of your current business reality, Bayesian analysis can give you faster, more accurate results. But if that prior is off, or based on outdated or biased assumptions, it can give you a false sense of confidence. You can think of it like retouching a headshot: it might look polished on the surface, but it doesn’t always reflect the real picture.

As the stats expert Ishan Goel, puts it:

If an idea has worked on 10 websites before, Bayesian thinking assumes it’s more likely to work on the 11th. But if it failed on those 10, a surprising success might raise red flags about your data. This ability to factor in prior expectations can lead to faster, more accurate decisions. But only if your assumptions are right. If they’re wrong, Bayesian methods may need even more data than frequentist ones to correct the course.

—Ishan Goel

Founder, Thinking Bell

2. Introduces subjectivity through priors

Bayesian methods rely on prior assumptions which can introduce subjectivity into the analysis. As a result, deciding what prior to use, how strong it should be, and whether it accurately reflects reality, isn’t always straightforward. If team members or stakeholders disagree on what the prior should be, it can create confusion, inconsistency, or even biased outcomes. Without clarity about how priors are defined and what the Bayesian output actually represents, it is easy to mistake belief for certainty.

In contrast, frequentist methods avoid this issue by analyzing the data independently of any prior belief.

When should you use each method?

Clearly, each method has its own strengths and depends on factors like sample size, speed, and business goals.

If you want a simple way to decide which method to use, here’s what Juliana J., Associate Director, Data & Digital Experience at Monks says:

When you look to ask, ‘how likely is this result if there’s really no difference?’ go with Frequentist. It keeps things objective and avoids assumptions. But if you already have meaningful insights and want to update your understanding as new data comes in, go with Bayesian; it’s less about data size and more about context. The real skill is knowing both methods and choosing the right one for the situation.

—Juliana Jackson

Digital Marketing & Data Leader

Combining both models in accurate A/B testing

However, it doesn’t always have to be so black and white.

In fact, Ben Labay, CEO of Speero says his best approach involves blending both methodologies since they each offer benefits.

I lean on frequentist power analysis to carefully design tests, ensuring the right sample size and runtime for robust results. But post-experiment, I find Bayesian calculators helpful for delivering intuitive 'chance to beat control' metrics that better resonate with stakeholders, sidestepping the statistical-semantic land-mines of p-values and getting more into the heart and practice of 'applied science' rather than science.

—Ben Labay

CEO, Speero

Ben is not alone. Many modern experimentation programs now use frequentist methods for statistical control and Bayesian frameworks for business interpretation.

For example, you might run a test using sequential frequentist logic, but overlay Bayesian reporting to help decision-makers understand risk and reward.

Another common hybrid approach is to use frequentist testing during initial product launches where regulatory or statistical certainty is critical, then switch to Bayesian methods for ongoing personalization or optimization. This approach allows you to balance precision with agility, using each method where it fits best in the experimentation lifecycle.

Whatever you decide, either method can be valid, as long as you're ensuring your test data is trustworthy.

Statistical methods based on your organizational needs

Different teams often favor different testing methods based on their goals, workflows, and communication needs.

Marketing teams

Marketing teams typically need quick, easy-to-interpret results that tie directly to business KPIs.

In this context, Bayesian methods tend to work best because statements like “there’s a 15% chance version B is better” resonate well with result-driven stakeholders.

A Bayesian approach makes it easier to quantify and drive business decisions. Product managers

Often juggle multiple initiatives and need to stay agile. As a result, they may also lean toward Bayesian testing.

This approach supports faster, iterative decision-making because it allows flexibility for mid-test check-ins without invalidating results.

Engineering and data science teams

Engineering teams may prefer the structure and statistical rigor of frequentist methods.

When applied with proper controls, such as sequential adjustments offered by platforms like Kameleoon, frequentist testing provides strong reproducibility and reliability, making it ideal for scaled experimentation.

UX and design teams

Design teams may find a blend of both methodologies most helpful. When closely integrated with product and marketing, these teams may benefit from the speed and clarity of Bayesian outputs.

But if the focus is on validating long-term changes across diverse user groups, the precision and guardrails of frequentist testing can offer more confidence in the results.

The best approach aligns with your organizational needs No matter what approach you choose, it’s important to align the statistical methodology you use with your team's goals.

By doing so, you can clearly translate the statistical outputs into actionable business insights.

Final considerations

There is no single winner in the "frequentist vs Bayesian" debate. No method replaces critical thinking. Whichever you choose, make sure your test design, data quality, and business context are solid.

Because both methods have strengths and weaknesses, your choice should depend on:

- The test question you’re trying to answer

- Your team’s statistical expertise

- How quickly you need results

- The consequences of a wrong decision

Whether you're a data scientist optimizing checkout flows, a product manager testing features, or a marketer running campaigns, deciding on the right statistical method means choosing the one that best serves your users and business.

The real win is knowing when to apply each approach to get reliable insights, communicate clearly, and move forward with confidence.

Ready to test smarter?

Whether you choose frequentist, Bayesian, or a mix of both, the key is using the right method at the right time.

With Kameleoon, you get the flexibility, statistical control, and business-ready insights to use both approaches to confidently run trustworthy experiments at scale.

“We needed a way to assess visitor interest more accurately. With manual scoring, lead quality was too low. We chose Kameleoon for its AI, which precisely targets visitors based on their interest in our cars.”

“This campaign shows the multiple benefits of artificial intelligence for personalization. Toyota’s teams have been completely convinced by the performance of Kameleoon’s AI personalization solution and are expanding this approach across the customer journey.”