Experimentation is not one method

Too many teams still treat experimentation like it only works one way.

Run an A/B test. Wait for significance. Then decide.

That mindset is outdated and it is holding companies back.

In the 2025 Experimentation-Led Growth Report by Kameleoon, high-growth companies show us a different path. They have built testing cultures where every team can experiment in ways that fit their goals, constraints, and stage of maturity.

Marketing is no longer shackled to "stat sig or it doesn’t count."

And product is no longer excused from showing results.

The rules are being rewritten.

More and more marketing teams are now choosing the level of rigor that fits the moment.

Product and engineering teams are being increasingly asked to connect their work to real outcomes e.g. revenue, activation, and retention.

As Leah Tharin puts it, product now has to prove its impact on the business.

That is growth. And growth requires learning.

To support that shift, the best teams work across two spectrums: the type of experiment they run, and the level of rigor they apply.

The spectrum of experiment types

1. Progressive delivery (ensure you don’t break something)

Used by: Product, Dev, Engineering

Purpose: De-risk feature releases

How it works: Roll out a new feature to a small group. Monitor critical metrics. Catch major failures early.

Pros:

- Fast feedback on technical and behavioral risk

- No need for large sample sizes or statistical models

Cons:

- No control group or causal inference

- Cannot easily prove performance gains

2. Feature flag observation (see what users actually do)

Used by: Product, UX, Data

Purpose: Learn from live behavior without formal testing

How it works: Use toggles to show or hide features for selected audiences. Track how users engage.

Pros:

- Flexible release and behavioral insight

- Useful for UI or adoption feedback

Cons:

- Not randomized

- Can produce misleading results without careful measurement

3. Personalization with a holdout (optimize for certain segments)

Used by: Product, Growth

Purpose: Deliver targeted experiences and compare impact

How it works: Serve content or offers to segments while holding back a portion for comparison.

Pros:

- Matches real-world use

- Enables segment-level learning

Cons:

- Control group may not be truly comparable

- Hard to generalize to all users

4. A/B testing (Did this change work? And by how much?)

Used by: Growth, Analytics, Marketing, Product

Purpose: Prove impact with high confidence

How it works: Randomly assign users to test and control groups. Use statistical analysis to compare results.

Pros:

- Clear causal insight

- Ideal for business-critical decisions

Cons:

- Slower and more resource-intensive

- Overkill for small or obvious changes

The spectrum of rigor

Not every test requires statistical significance.

Insisting on it in the wrong context can block progress, especially in marketing, where timelines are short and signals are weak.

Here is how leading teams adjust their level of rigor:

Why some teams use the spectrum—and others don’t

Product teams have given themselves permission to experiment with flexible levels of rigor. Frameworks like Itamar Gilad’s Confidence Meter have helped normalize the idea that not every feature needs statistical validation. Small or low-risk changes can move forward based on directional signals, adoption rates, or early telemetry.

Marketers, and most CRO practitioners, on the other hand, are often told they must A/B test. They are held to a higher standard of validation, even when the changes are small and the signal is weak. If their test doesn’t hit 95% confidence, it’s considered invalid. If it’s not statistically rigorous, it’s dismissed. Teams spend hours arguing over complex statistical methods rather than considering the directional trend.

This isn’t about lowering the bar for marketing. It’s about applying the same judgment to all teams: match the rigor to the question. If product gets a spectrum, so should everyone else.

How to put this into practice across teams

The spectrum only matters if teams use it. That means understanding how to apply the right type of test with the right level of rigor, not just in theory but in your actual day-to-day.

If you’re in marketing

You can experiment without always running a full A/B test. Here's how:

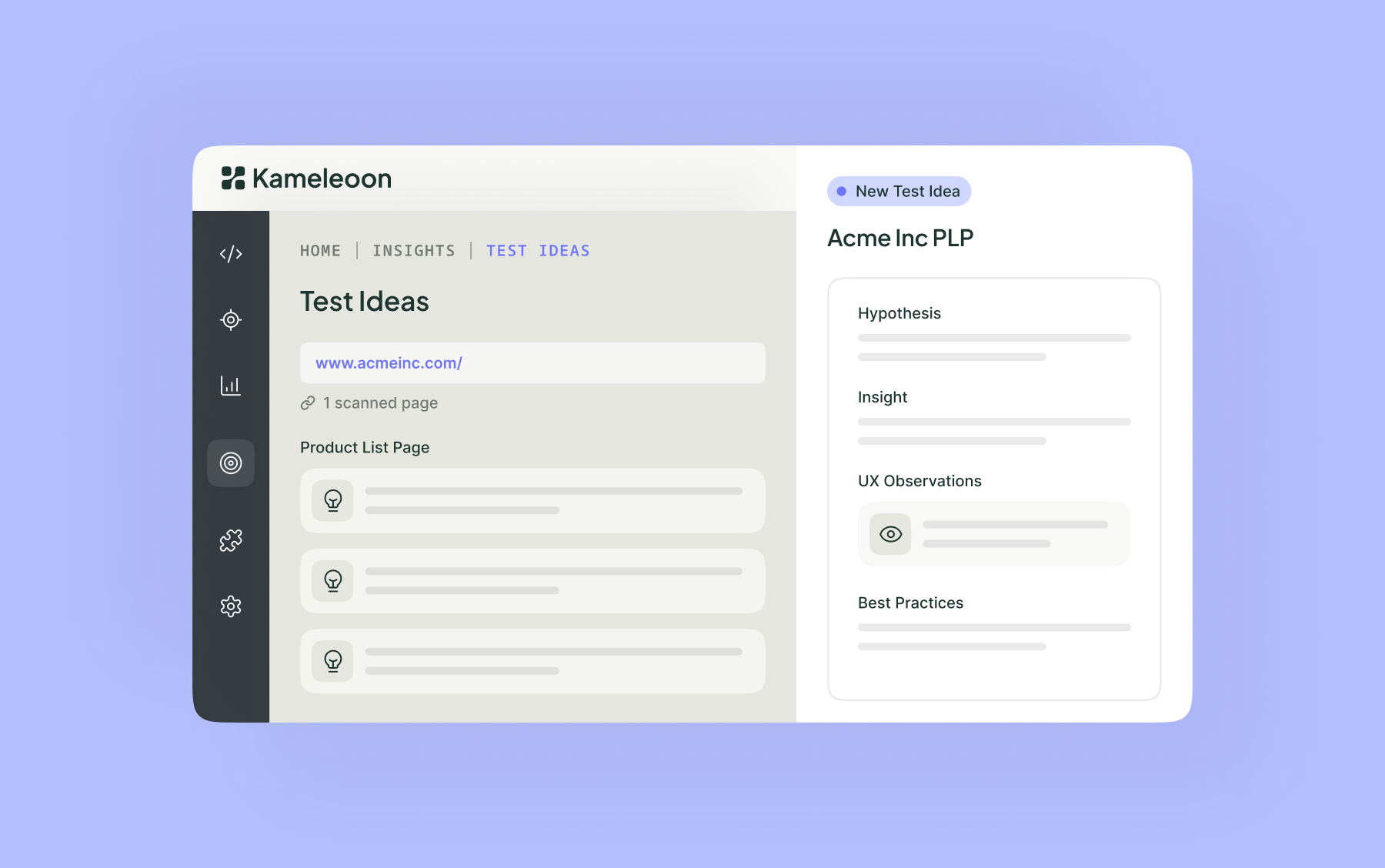

- Use AI tools or visual editors to launch changes on high-traffic pages or segments

- Collaborate with developers when you need to go beyond basic edits

- Compare behavior before and after, or across audience segments

- Track directional metrics like bounce rate, scroll depth, or lead quality

- Set thresholds for success or rollback before launch

- Tie results to KPIs like revenue per visitor or downstream conversion

This is experimentation focused on outcomes, not statistical perfection.

If you’re in product

Don't just ship. Don't experiment with low rigor. Now it is time to show business impact. Here's how:

- Start with a clear KPI such as activation, retention, or monetization

- Use holdouts, cohort tracking, or A/B tests for your biggest bets

- Work with UX and design to validate assumptions before launch

- Do not stop at usage metrics. Connect your work to business outcomes

- Show how your feature changed behavior or supported company goals

Business alignment comes faster when your experiments are measurable.

If you’re working together (congrats!)

Companies with aligned marketing and product teams are more than twice as likely to report significant growth. Go outperform. Here's how:

- Use a single platform to see all product and marketing tests in one view

- Track how experiments in one area affect results in another

- Rely on cross-campaign analysis to interpret overlaps and interaction effects

- Do not pause tests by default. Use data to learn across initiatives

- Build a feedback loop between teams to scale insight, not just output

Growth happens faster when teams learn in sync, not in silos.

Experimentation is not one method.

Testing is not a rigid process only analysts can own.

It is how teams learn and improve, across goals, tools, and timelines.

Sometimes that means full statistical rigor.

Other times it means catching problems fast or observing real-world behavior.

The smartest companies know how to choose the right approach based on what they need to learn.

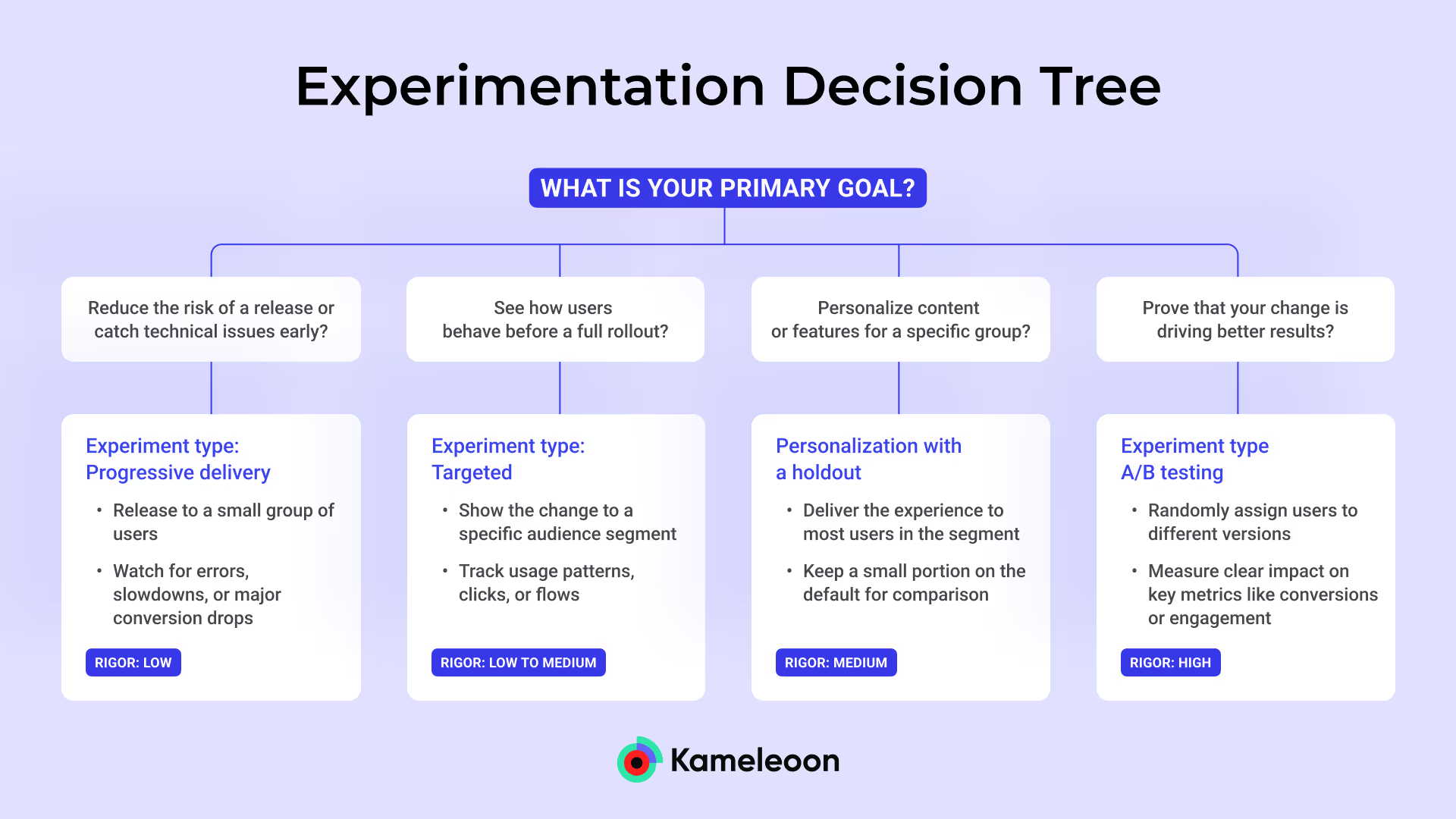

Your goal determines which experiment type to use

To help you adopt how high-performing companies use experimentation, we’ve included a simple guide to match your experimentation goals with the right method. Whether you want to de-risk a release, understand adoption, personalize experiences, or prove impact, this guide can help your team act with confidence, not guesswork.

Experimentation decision tree

What is your primary goal?

Reduce the risk of a release or catch technical issues early?

- Experiment type: Progressive delivery

- Release to a small group of users

- Watch for errors, slowdowns, or major conversion drops

- Rigor: Low

See how users behave before a full rollout?

- Experiment type: Targeted observation

- Show the change to a specific audience segment

- Track usage patterns, clicks, or flows

- Rigor: Low to Medium

Personalize content or features for a specific group?

- Experiment type: Personalization with a holdout

- Deliver the experience to most users in the segment

- Keep a small portion on the default for comparison

- Rigor: Medium

Prove that your change is driving better results?

- Experiment type: A/B testing

- Randomly assign users to different versions

- Measure clear impact on key metrics like conversions or engagement

- Rigor: High

“We needed a way to assess visitor interest more accurately. With manual scoring, lead quality was too low. We chose Kameleoon for its AI, which precisely targets visitors based on their interest in our cars.”

“This campaign shows the multiple benefits of artificial intelligence for personalization. Toyota’s teams have been completely convinced by the performance of Kameleoon’s AI personalization solution and are expanding this approach across the customer journey.”