Fixed horizon A/B testing: what it is and when to use it

At Kameleoon, we see firsthand how fixed horizon testing anchors experimentation programs across leading product-led organizations.

Despite the popularity of adaptive sequential testing methods, fixed horizon testing remains indispensable for high-stakes decision-making, where rigor, trust, and statistical validity are non-negotiable.

In this guide, we’ll walk through what fixed horizon testing is, the key considerations for choosing it, why it still matters, and how it fits into modern experimentation stacks.

- What is fixed horizon testing?

- Fixed horizon testing in action

- Key considerations when choosing fixed horizon testing

- Layering fixed horizon testing and sequential testing for robust decision-making

- Putting fixed horizon testing to work

What is fixed horizon testing?

Fixed horizon testing is a classical frequentist approach to experimentation in which the sample size or test duration is defined before the experiment starts.


“Fixed horizon” means you commit in advance to either a specific sample size or a specific duration, and you end the experiment when you reach it. So reaching your fixed horizon might mean:

- Running until 100,000 users (sample size) see the experiment, or

- Running for 4 weeks (duration).

By locking in your sample size or duration, you align the test with your statistical power calculation, give it the intended probability of detecting the minimum detectable effect (MDE), and protect your program from false confidence.

Most enterprise-grade experimentation programs prefer fixed sample sizes because they align more directly with statistical power calculations. Sometimes, fixed duration is also used when traffic is less predictable or seasonal patterns matter.
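To make the link between the horizon and the power calculation concrete, here is a minimal Python sketch of a standard sample size approximation for a two-sided, two-proportion z-test. The function names, the 5% baseline conversion rate, the 10% relative MDE, and the 5,000 daily visitors are illustrative assumptions, not figures from a real program or from any Kameleoon API.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate users needed per arm for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # rate under the smallest lift worth detecting
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance_sum / (p2 - p1) ** 2)


def days_to_horizon(n_per_arm, daily_visitors, arms=2):
    """Convert a fixed sample size into a duration, assuming steady traffic."""
    return ceil(n_per_arm * arms / daily_visitors)


# Hypothetical inputs: 5% baseline conversion, 10% relative MDE, 5,000 visitors per day.
n = sample_size_per_arm(baseline_rate=0.05, relative_mde=0.10)
print(n, "users per arm,", days_to_horizon(n, daily_visitors=5000), "days")
```

Under these assumed inputs, the estimate comes out at roughly 31,000 users per arm, or about 13 days of traffic. Halving the MDE roughly quadruples the requirement, which is why small expected effects call for long horizons.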

There are two key components to a fixed horizon test:

1. You run the test uninterrupted; no peeking for decision-making.

Once live, the test runs to completion. There’s no “peeking” at results to make early decisions; decisions are made only at the end of the planned experiment length, usually through a t-test or a z-test. Peeking is discouraged because repeatedly checking and stopping at the first significant result inflates the false positive rate, making it more likely you’ll see significance in noise.

That said, as we’ll discuss shortly, fixed horizon tests are sometimes used as a calibration layer within a broader experimentation program. In these cases, you might compute or monitor early data for directional signals or planning, but crucially, you don’t act or make decisions based on interim peeks. Action is reserved for the final analysis at the fixed horizon.

2. You do your final analysis only at the end.

The final decision is made only after you reach the preset sample size or duration, so your readout is based on the complete, fully powered data set. Unlike sequential tests, which evaluate results as data accumulates and can stop early, fixed horizon testing runs to a predetermined endpoint, with no premature conclusions. The final readout is clean, unbiased, and defensible, exactly what you need when the stakes are high.
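As an illustration of that single end-of-test readout, the sketch below runs a pooled two-proportion z-test once, on the final counts. The function name and the conversion counts are hypothetical, and a real analysis may use a different test (for example, a t-test on a revenue metric).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided pooled z-test comparing conversion rates of control (a) and variant (b)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate under the null hypothesis
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical final counts, read once after each arm reached its 50,000-user horizon.
z, p_value = two_proportion_z_test(conv_a=2500, n_a=50000, conv_b=2700, n_b=50000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

With these made-up counts (5.0% versus 5.4% conversion), the z-statistic is about 2.85 and the p-value is below 0.01. The key point is procedural: the function is called once, at the horizon, not at every interim look.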

Fixed horizon testing in action: an enterprise e-commerce loyalty program rollout

Let’s look at how a major e-commerce brand could use fixed horizon testing to confidently launch a new loyalty program.

Background:

A major fashion retailer is planning to launch a new loyalty program with exclusive perks (free returns, early access to sales, and member-only pricing). The change will be sitewide and high-impact, involving cross-functional stakeholder buy-in from product, branding, marketing, sales, and even CX. The business needs to validate impact on purchases and behavior before scaling it globally.

The hypothesis:

Launching a loyalty program will improve customer lifetime value by increasing repeat purchases and engagement without hurting short-term conversion rates.

But the business needs confidence. Before scaling globally, they want to validate the program’s true impact on purchase behavior through rigorous experimentation.

Now, sequential testing could be tempting here. It allows:

- Early monitoring of uplift

- Faster detection of large effects (though we aren’t expecting large effects here; more on that in a bit)

- Flexible decision-making based on interim data

But in this case, it’s too risky. Here’s why:

- Interim results are prone to noise, especially when expected effect sizes are small.

- The test introduces sitewide messaging changes, which can have complex or delayed downstream effects.

- Stopping early based on interim wins (or losses) risks biasing the result, especially if only short-term metrics like cart conversion are considered.

In short: sequential testing is excellent when you're validating a strong prior or looking for big, immediate signals, but for nuanced, high-stakes product decisions like this one, it leaves too much room for false positives or premature conclusions.

Why fixed horizon testing?

This test is about learning and not just validating quickly. Here’s why fixed horizon is the better fit, viewed through five key lenses:

1. Magnitude of effect

Loyalty programs typically influence behavior subtly in the short term (think slight lifts in AOV or repeat purchase rate). These small effect sizes require a fully powered sample to detect reliably. Stopping early risks missing the signal altogether.

2. Stakes of the decision

This is a high-impact, organization-wide initiative. The rollout affects multiple teams and touchpoints across the customer journey. Decisions made on early data could create costly downstream consequences. Fixed horizon testing ensures that conclusions are sound and defensible.

- Kameleoon is designed for such organization-wide experimentation programs.

3. Tolerance for bias

Because you only analyze at the end, fixed horizon testing avoids the inflated false positive risk associated with repeated peeking. For an exec-facing initiative like this, where credibility matters, a bias-resistant readout is critical.

4. Operational realities

The business has consistent traffic and a clear four-week window available, perfect conditions for running a fixed-duration test without compromising timelines. It’s straightforward to implement and aligns with planning cycles across teams.

5. Experimentation calibration

Even if earlier pilot or sequential tests offered directional promise, this test acts as the final confirmation layer. It converts early indicators into boardroom-ready insights, helping product and marketing commit with confidence.

The takeaway:

When your goal is to learn with confidence, not just move fast, fixed horizon testing is your best ally. It creates a bias-resistant readout that withstands scrutiny, something sequential testing, with its flexibility and early peeking, isn’t always built for.

For this loyalty program, where the business needs to understand not just if it works, but how well and at what cost, fixed horizon testing turns experimentation into strategic clarity.

Key considerations when choosing fixed horizon testing vs. other approaches

It’s common to think of fixed horizon and sequential testing as an either-or choice.

Big expected effects? Sequential testing to move fast.

Small, incremental effects? Fixed horizon testing for rigor.

But it’s more nuanced. Let’s break down how to truly weigh your options:

1. Consider the magnitude of the expected effect

When building your hypothesis, you typically have a sense of what impact to expect, based on past experiments, customer behavior, or industry benchmarks. That expectation helps you set your minimum detectable effect (MDE).

Minimum Detectable Effect (MDE) is the smallest difference between a variation and the control that a test is designed to reliably detect, given a specific level of statistical confidence and power. In plain terms, it’s the smallest uplift (or drop) in a key metric, like conversion rate, that your experiment must detect for you to confidently say, “This change worked.”

For small, predictable improvements (e.g., 1–2% uplift), fixed horizon testing is more reliable. Early peeking can obscure subtle effects in noise, so a fixed sample size or duration ensures a statistically valid readout at the end.

For larger expected uplifts (5–10% or more), sequential testing lets you detect those bigger effects sooner, allowing early stopping and faster learning.

2. Consider the stakes of the decision

High-stakes, organization-wide changes (like updating a core product experience) often call for disciplined fixed horizon testing. This approach delivers a final, bias-resistant readout that your exec team and compliance stakeholders can trust.

This also applies to downstream or long-term metrics like retention rates or lifetime value. Short-term signals from adaptive testing can be misleading, because early gains in engagement don’t necessarily translate to long-term success. Fixed horizon testing ensures you’re basing decisions on the complete, fully matured data set, not just early trends. That said, while fixed horizon testing gives you a bias-resistant readout for near-term outcomes, long-tail effects like retention are typically studied later through cohort analyses (and not by running a 90-day experiment).

In fact, in practice, a long fixed horizon test is usually just a standard fixed horizon test for initial conversions (often spanning a few weeks), followed by separate cohort-based analyses to track 30, 60, or 90-day performance. This layered approach ensures that while you’re acting on near-term insights, you’re also watching for longer-term trends that can inform broader strategic decisions.

For lower-risk or more iterative experiments, in contrast, sequential testing can offer faster insights. But again: if these insights influence broader decisions down the line, you may still need to validate with a fixed horizon “calibration” test to keep your decision-making foundation solid.

3. Consider your tolerance for bias

Fixed horizon testing minimizes bias by design: you analyze once at the end, with no peeking and no repeated slicing. It’s ideal in regulated environments, such as healthcare and financial services. Sequential testing, in contrast, allows interim monitoring and is great for fast-moving product cycles. But it demands careful error control (such as adjusting the significance threshold at each interim look with alpha spending functions). If you’re not fully set up for that, fixed horizon is the safer path.

4. Consider operational realities

If traffic is highly predictable and you can accurately forecast the sample size needed to detect your MDE, fixed horizon testing is often easier to implement. It’s straightforward: run the test to 100,000 users (or 4 weeks), analyze once, and move forward. Variable traffic or shifting priorities? Sequential testing gives teams the flexibility to pivot or stop early.

5. Experimentation calibration

Many enterprises layer fixed horizon testing as a calibration and compliance baseline. It’s the trusted layer that validates the conclusions of faster, exploratory tests. So while fast-moving product teams might use sequential testing to iterate quickly, fixed horizon tests give those insights the weight needed to drive high-impact decisions.
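The bias concern raised under “tolerance for bias” can be checked with a small simulation. The sketch below runs A/A tests (both arms share the same true conversion rate, so every significant result is a false positive) and compares two rules: declaring a winner at the first significant interim look without any correction, versus analyzing only at the fixed horizon. All names and parameters (1,000 simulated tests, five looks, 200 users per arm per look, a 5% rate, the seed) are illustrative assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist

Z_CRIT = NormalDist().inv_cdf(0.975)  # two-sided 5% significance threshold


def z_stat(conv_a, conv_b, n):
    """Pooled two-proportion z-statistic for two arms of equal size n."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    return 0.0 if se == 0 else (conv_b - conv_a) / n / se


def simulate_aa(n_sims=1000, looks=5, users_per_look=200, rate=0.05, seed=7):
    """Return (false positive rate with uncorrected peeking, rate with one final analysis)."""
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(n_sims):
        conv_a = conv_b = n = 0
        significant_at_any_look = False
        for _ in range(looks):
            # Both arms draw from the same rate: there is no true effect to find.
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            significant_now = abs(z_stat(conv_a, conv_b, n)) > Z_CRIT
            significant_at_any_look = significant_at_any_look or significant_now
        peeking_hits += significant_at_any_look
        final_hits += significant_now  # the last look is the fixed horizon
    return peeking_hits / n_sims, final_hits / n_sims


peeking_rate, final_rate = simulate_aa()
print(f"uncorrected peeking: {peeking_rate:.3f}, fixed horizon: {final_rate:.3f}")
```

The fixed horizon rate should sit near the nominal 5%, while the uncorrected peeking rate typically lands well above it. Properly designed sequential methods close that gap by tightening the threshold at each look, which is the extra setup the fixed horizon approach lets you avoid.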

Layering fixed horizon and sequential testing for robust decision-making

Sequential testing is perfect for fast-moving ideas and iterative learning. Fixed horizon testing, meanwhile, anchors high-stakes decisions, validating key hypotheses, building executive trust, and ensuring data integrity.

Integrating both approaches across different phases of a single program, such as early directional testing for feature exploration followed by fixed horizon validation before a major rollout, can work well.

For example, your product team might start with sequential testing to quickly spot whether a new feature idea has potential, using small sample sizes and continuous monitoring to pivot fast if early indicators are negative. Once you identify a promising variation consistently showing upside across multiple sequential tests, you switch to fixed horizon testing to confirm the result in a more disciplined way.

This fixed horizon phase isn’t just a formality; it’s what gives you that “finality” with the statistical weight and confidence you need to roll out the change at scale.

This layered approach makes your experimentation program more resilient. Here, you’re letting sequential testing help you move fast and explore, while fixed horizon testing locks down only the most promising, high-impact changes, blending the agility of sequential testing with the rigor and trust of fixed horizon readouts.

Putting fixed horizon testing to work

Knowing when to lean on fixed horizon testing and how to blend it with more adaptive approaches lets you move fast, minimize bias, and build a culture of data-driven experimentation that’s both nimble and disciplined. It’s about making informed, defensible decisions at every stage, responsive to early signals, yet anchored by a final readout you can stand behind.

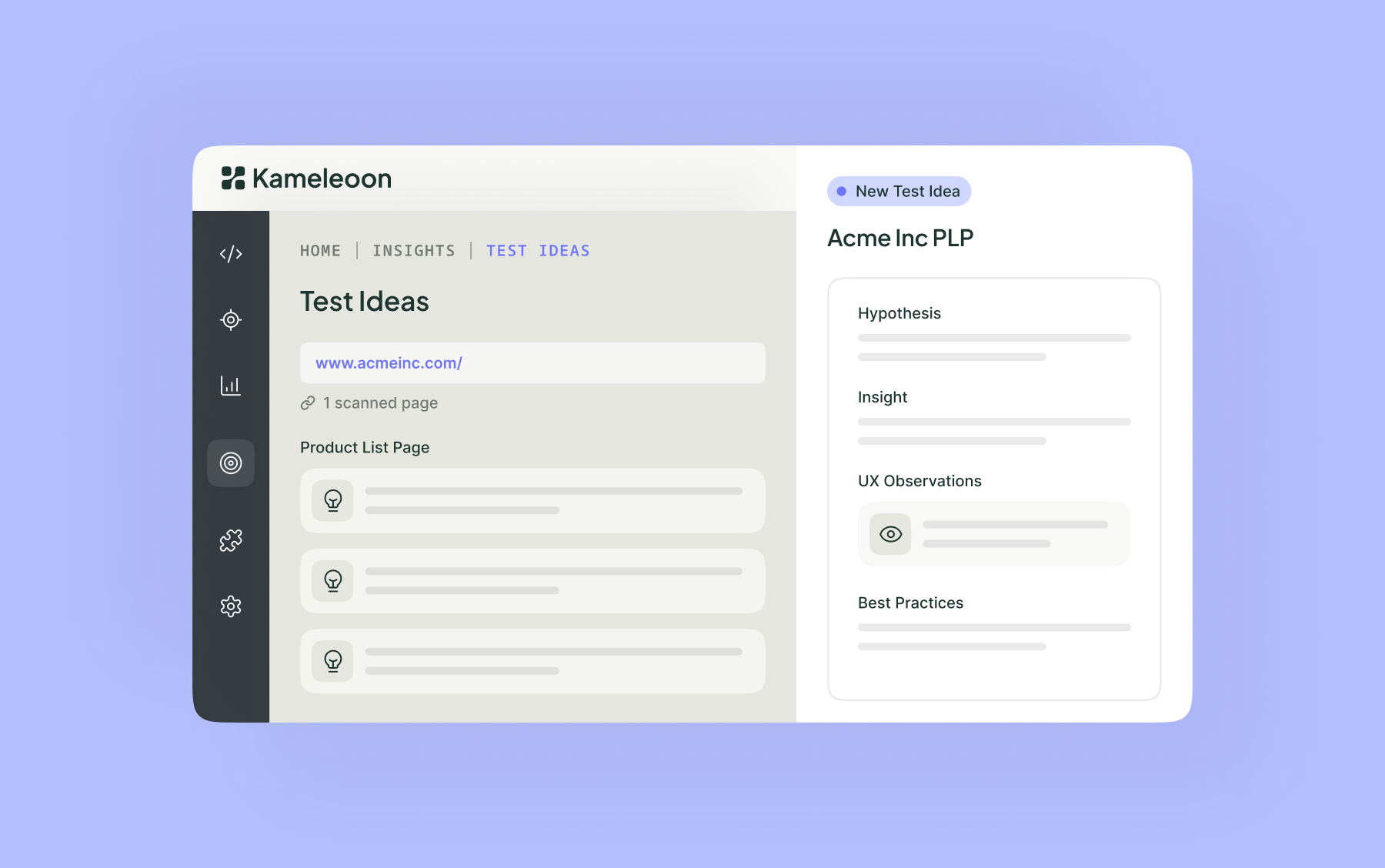

As your experimentation partner, Kameleoon supports every layer of this journey. From fixed horizon tests to sequential analyses and the nuanced workflows that tie them together, our platform covers it all.

Ready to take your experimentation program to the next level? Let’s talk about how Kameleoon can help.
