How to use testing in production for safer, faster releases

Testing in production (or shift-right testing) is one of the most effective ways to de-risk your release cycle. By testing in production, you extend your coverage into the environment that matters most: your production environment.

Production testing lets you catch what traditional testing can’t: edge cases, real-world execution issues, and actual user behavior in live conditions. And you can do it gradually, safely, and with the necessary guardrails in place.

In this article, we explore what testing in production is, how to choose an approach, which methods are available, and how feature flags help you get started.

What is testing in production?

Testing in production means exactly what it says. You test... in production.

Testing in production doesn’t discount or replace any of the testing that happens early in the development lifecycle or across it.

Instead, it extends testing to the production environment, since pre-prod environments, no matter how thoroughly they’re built, aren’t the safety nets they’re assumed to be.

Often they fail to capture the full complexity of production. There will always be factors you can’t account for: live traffic, real data, scale, edge cases, third-party issues, and user behavior.

In other words: because only production has the full context (live users, live data, and real behavioral signals), you take testing right to it.

Testing in production: how it works & key considerations

Testing in production involves a mix of approaches: from silent background runs (like dark launches and shadow testing) and partial rollouts (like canaries or ring deployments) to structured experiments (like A/B or multivariate testing). The method you choose depends on three key factors: risk level, user impact, and business goals.

1. Risk level

Assess the blast radius before anything else.

If you’re shipping a high-risk change, start silent. Shadow testing or dark launches allow you to validate logic and performance under real-world load without any impact on the user experience.

For example, you don't want a new fraud detection model to start blocking real transactions until you’ve confirmed it flags accurately and consistently. Run it behind the scenes on production traffic, compare outcomes to your current system, and only move forward once you’ve seen it behave as intended.

Observability must-haves:

- Structured logging for prediction vs. ground-truth comparison

- Distributed tracing to follow decision latency across services

- Dashboards tuned to detect false positives/negatives over time
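
As a rough illustration of the first and third items, the sketch below tallies a shadow model's predictions against ground truth once the real outcomes are known. The record shape `(predicted_fraud, actual_fraud)` and both function names are assumptions made for this example, not part of any specific tool.

```python
from collections import Counter
from typing import Iterable, Tuple


def confusion_counts(records: Iterable[Tuple[bool, bool]]) -> Counter:
    """Count outcomes from (predicted_fraud, actual_fraud) pairs."""
    counts = Counter()
    for predicted_fraud, actual_fraud in records:
        if predicted_fraud and actual_fraud:
            counts["true_positive"] += 1
        elif predicted_fraud and not actual_fraud:
            counts["false_positive"] += 1
        elif not predicted_fraud and actual_fraud:
            counts["false_negative"] += 1
        else:
            counts["true_negative"] += 1
    return counts


def false_positive_rate(counts: Counter) -> float:
    """Share of legitimate transactions the model would have blocked."""
    negatives = counts["false_positive"] + counts["true_negative"]
    return counts["false_positive"] / negatives if negatives else 0.0
```

Feeding these counts into a dashboard over time is one way to see whether the new model would have blocked legitimate transactions before it is allowed to act on them.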

2. User impact

If users will experience the change your code introduces, your testing strategy has to reflect that. Backend-only updates can often run silently without issue, but any visual, behavioral, or performance-affecting update needs a different lens. You’re not just validating logic; you’re measuring experience.

For changes like these, use controlled A/B testing or progressive delivery to limit exposure. Observe real user behavior in real time and fine-tune based on interaction, not just functional correctness.

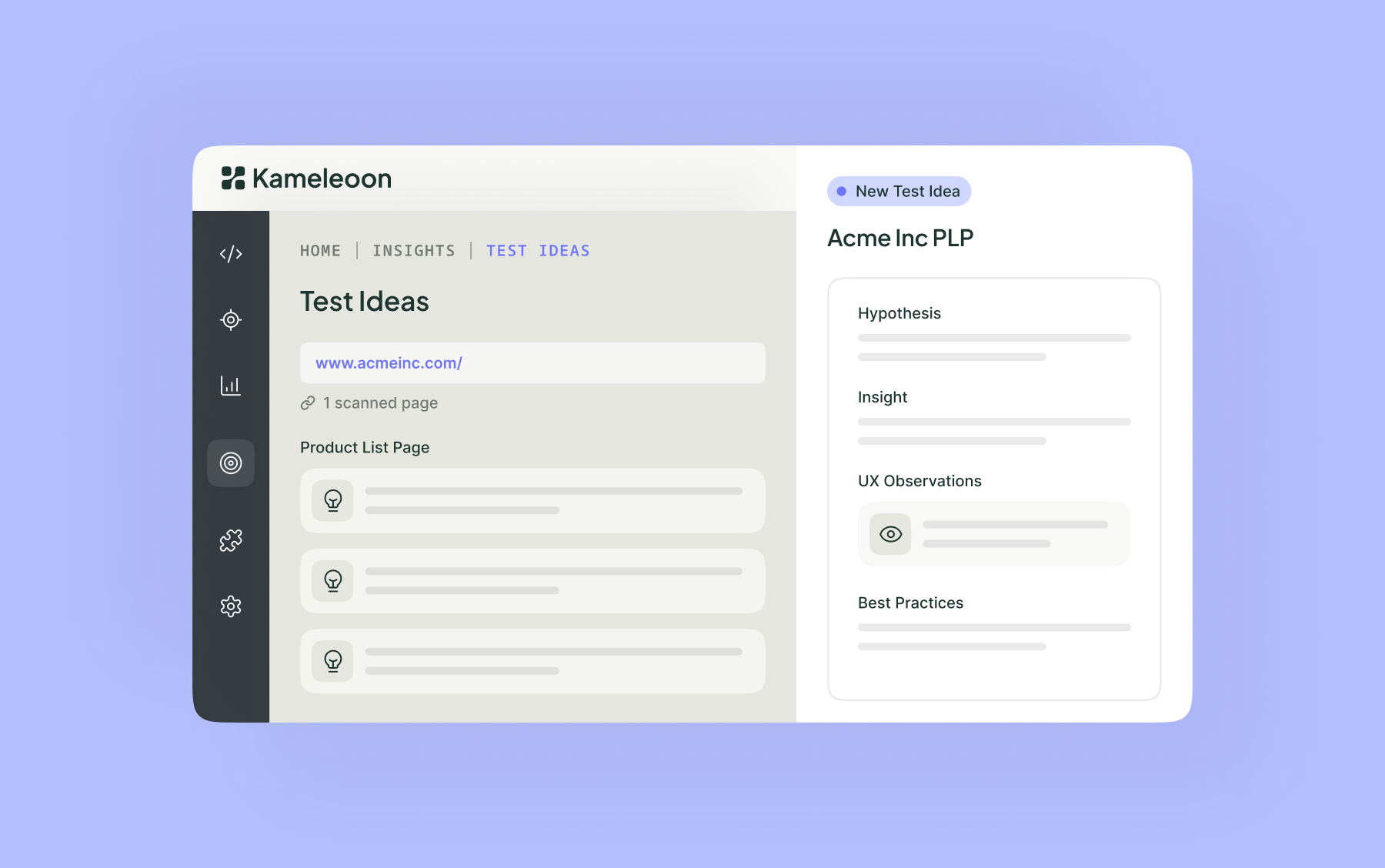

With Kameleoon’s AI Copilot, you can auto-manage exposure based on live performance thresholds.

Observability must-haves:

- Real-time UX analytics (clicks, scrolls, drop-offs)

- Custom events for key interactions (e.g., "checkout button clicked")

- Segment-wise tracking (e.g., new vs. returning users)

- Integrated alerting on conversion rate dips

3. Business goals

The “why” behind your test defines how you run it.

If you're testing for correctness (like billing logic, policy rules, or infrastructure migrations), lean toward silent or parallel methods.

If you’re looking for engagement, stickiness, or conversion improvements, you need cohort-based strategies.

In these cases, your success isn’t defined by technical stability. Instead, it’s defined by what users do. So you can run an A/B test, measure downstream activation and retention metrics, and decide based on what drives real business outcomes.

Observability must-haves:

- Funnel tracking with cohort breakdowns

- Engagement metrics tied to release versions

- Statistical significance monitoring (e.g., using Kameleoon)

- Goal-oriented dashboards (activation rate, time to value, NPS)

Essentially, you mix and match.

Testing in production isn’t about picking one method. It’s about layering or orchestrating them intelligently. You might shadow test a backend service, dark launch the model that powers it, and then canary the feature flag that surfaces it in the UI to a small percentage of users, all within one coordinated workflow.

Testing in production methods

Dark launching

A dark launch allows you to deploy new code into production and execute it against live data without user exposure. Here, your feature is active in the backend (producing outputs, logging behavior, and generating performance metrics) but hidden behind a feature flag.

A personalization engine, for example, is first deployed in dark mode, running silently in production and processing live data without affecting the user experience. Its predictions are benchmarked against the live model, with key metrics (accuracy, latency, and throughput) tracked closely. Once validated for stability and performance, the feature is progressively rolled out via canary or full release.

Requires: Feature flags, observability, output diffing tools
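
A minimal sketch of the idea, assuming the live and dark models are plain callables and the flag is a boolean you read from your own flag system. `serve_with_dark_launch` and its parameters are hypothetical names used only for illustration.

```python
import logging
from typing import Callable

logger = logging.getLogger("dark_launch")


def serve_with_dark_launch(
    request: dict,
    live_model: Callable[[dict], str],
    dark_model: Callable[[dict], str],
    dark_enabled: bool,
) -> str:
    """Always return the live result; run the dark path only to compare."""
    live_result = live_model(request)
    if dark_enabled:
        try:
            dark_result = dark_model(request)
            if dark_result != live_result:
                logger.info("dark mismatch: live=%s dark=%s", live_result, dark_result)
        except Exception:
            # A failure in the dark path must never reach the user.
            logger.exception("dark path failed")
    return live_result
```

The user only ever sees `live_result`. In a real service you would likely run the dark call asynchronously so it cannot add latency to the response.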

Shadow testing

In shadow testing, the new code path runs in parallel to production, receiving a mirrored copy of live traffic. It doesn’t impact user-facing systems, but it executes fully, allowing side-by-side validation of results.

For instance, a new pricing algorithm would run in parallel by receiving mirrored production traffic, while users continue to be served by the legacy system. Any discrepancies are logged, analyzed, and resolved, well before the new logic is rolled out to the entire live environment.

Requires: Traffic mirroring, result diffing, and logging
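
The result-diffing step could look something like the sketch below. It assumes mirrored requests are already available as dictionaries and that both pricing functions return a number. `shadow_compare`, `Discrepancy`, and the tolerance value are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Discrepancy:
    request: dict
    legacy: float
    candidate: float


def shadow_compare(
    requests: Iterable[dict],
    legacy_price: Callable[[dict], float],
    candidate_price: Callable[[dict], float],
    tolerance: float = 0.01,
) -> List[Discrepancy]:
    """Run both code paths on mirrored requests and collect mismatches."""
    discrepancies = []
    for request in requests:
        legacy = legacy_price(request)
        candidate = candidate_price(request)
        if abs(legacy - candidate) > tolerance:
            discrepancies.append(Discrepancy(request, legacy, candidate))
    return discrepancies
```

Only the legacy result would be returned to users. The list of discrepancies is what you review before promoting the new logic.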

Canary rollout

A canary rollout begins by exposing the new feature to a small percentage (e.g., 1%) of users in production. Over time, as metrics are monitored, the exposure increases.

For example, a redesigned checkout flow may first be released to 1% of users. If engagement and error metrics remain within acceptable thresholds, exposure is gradually increased to 5%, then 20%, and eventually to a full rollout. Unlike silent methods, canary rollouts directly expose production users to new code in a controlled manner.

Requires: Traffic segmentation, rollback tooling, live monitoring
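
One common way to implement the traffic split is deterministic hashing, so a given user stays in the same group as exposure grows. The sketch below assumes string user IDs. The function name `in_canary` and the 10,000-bucket granularity are choices made for this example.

```python
import hashlib


def in_canary(user_id: str, feature: str, percent: float) -> bool:
    """Return True if this user falls inside the canary percentage.

    Hashing feature + user ID gives each user a stable bucket in 0..9999,
    so raising `percent` only ever adds users and never reshuffles them.
    """
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 10_000
    return bucket < percent * 100
```

Because the bucket is stable, every user who saw the new checkout at 1% still sees it at 5% and 20%, which keeps the experience consistent during the rollout.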

Progressive delivery

Progressive delivery automates canary logic, incrementally expanding exposure based on real-time performance data, without manual intervention. In a typical setup, a new service would enter production with rollout gated by specific metrics, such as latency under 300 ms and error rates below 0.2%. The rollout advances automatically as long as those thresholds hold.

Requires: Feature flag infrastructure, threshold logic, real-time data monitoring
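
The gating logic can be sketched as a small function that decides the next exposure level from current metrics. The thresholds come from the example above. The stage list, the use of p95 latency, and the choice to drop to 0% on a breach are assumptions for illustration; holding at the current stage is an equally reasonable policy.

```python
STAGES = [1, 5, 20, 50, 100]  # hypothetical exposure steps, in percent


def next_exposure(
    current_percent: int,
    p95_latency_ms: float,
    error_rate: float,
    max_latency_ms: float = 300.0,
    max_error_rate: float = 0.002,  # 0.2%
) -> int:
    """Advance one stage while metrics are healthy; otherwise roll back to 0."""
    healthy = p95_latency_ms < max_latency_ms and error_rate < max_error_rate
    if not healthy:
        return 0
    for stage in STAGES:
        if stage > current_percent:
            return stage
    return 100
```

A scheduler or pipeline step would call this on each evaluation window and write the result back to the flag or router that controls exposure.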

A/B testing

This testing enables data-driven decisions based on real user behavior in production, minimizing reliance on assumptions and reducing the risk of misaligned investments.

Two feature variants are served to distinct user segments in parallel. Real-time performance metrics such as conversion rate, bounce rate, and retention are tracked to determine which version delivers greater impact.

Unlike other production testing strategies focused on stability, this method is centered on optimization, guiding product decisions with empirical evidence from live environments.

Requires: Experimentation platform, statistical significance monitoring
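
To make "statistical significance" concrete, here is a textbook two-proportion z-test on conversion counts. It is a generic sketch, not the method any particular experimentation platform uses, and the function name is an assumption.

```python
import math
from typing import Tuple


def two_proportion_z_test(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (0% or 100% conversion in both groups).
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, 100 conversions out of 1,000 in variant A against 150 out of 1,000 in variant B gives a z-score above 3, which would usually be treated as a significant lift. Checking the result repeatedly while the test is still running inflates false positives, so platforms typically apply corrections that this sketch omits.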

Blue-green deployment

In a blue-green deployment, you operate two identical production environments: blue, which serves live traffic, and green, which hosts the new release. Before the switch, the green environment receives traffic from internal testers, synthetic users, and potentially a percentage of real users. Once validated, traffic is cut over to green, which becomes the live environment, while blue stays available for a quick rollback.

Requires: Load balancer support, parallel infrastructure
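
Conceptually, the load balancer holds a pointer to the live environment and flips it at cutover. The sketch below models only that pointer. `BlueGreenRouter` is a hypothetical class for illustration, not a real load balancer API.

```python
class BlueGreenRouter:
    """Tracks which environment is live and which is idle."""

    def __init__(self, live: str = "blue", idle: str = "green") -> None:
        self.live = live
        self.idle = idle

    def route(self, is_tester: bool = False) -> str:
        """Testers and synthetic users hit the idle environment; everyone else hits live."""
        return self.idle if is_tester else self.live

    def switch(self) -> None:
        """Cut over: the idle environment becomes live, and vice versa."""
        self.live, self.idle = self.idle, self.live
```

Calling `switch()` a second time is the rollback: traffic returns to the previous environment without a redeploy.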

Ring deployments

Ring deployments introduce features in controlled stages, beginning with internal teams, expanding to early adopters, and ultimately reaching the full user base. Each ring functions as a deliberate checkpoint for feedback, validation, and quality assurance. Feedback from each stage drives iteration and issue resolution before broad deployment. This staged approach enables real-world validation at every step, reducing risk and reinforcing stability ahead of a full-scale release.

Requires: Cohort management, staged release flows
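
Ring membership can be expressed as an ordered list, where a feature is visible to a user once the rollout has reached that user's ring. The ring names and the user attributes `is_employee` and `beta_opt_in` are assumptions for this sketch.

```python
RINGS = ["internal", "early_adopters", "everyone"]  # innermost ring first


def ring_of(user: dict) -> str:
    """Assign a user to the innermost ring they qualify for."""
    if user.get("is_employee"):
        return "internal"
    if user.get("beta_opt_in"):
        return "early_adopters"
    return "everyone"


def is_enabled(user: dict, current_ring: str) -> bool:
    """A feature is on for users whose ring is at or inside the current ring."""
    return RINGS.index(ring_of(user)) <= RINGS.index(current_ring)
```

Promoting a release is then a matter of moving `current_ring` one step outward after the feedback from the previous ring has been addressed.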

Chaos engineering

This form of testing strengthens confidence that your system can handle real-world stress without compromising availability.

By deliberately injecting controlled failures (such as terminating service nodes or simulating network outages) you validate the system’s resilience under production conditions. The objective is to ensure graceful degradation, automatic recovery, and continuity of service under pressure.

Key metrics like latency, error rates, and retry behavior are closely monitored to confirm that the platform responds as designed, maintaining stability and performance in the face of failure.

Requires: Fault injection, observability, mature incident response processes
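
At its simplest, fault injection wraps a dependency call so that it fails at a controlled rate, letting you check that retries and fallbacks behave as designed. The sketch below is a toy, in-process version. `with_fault_injection`, `call_with_retry`, and `InjectedFault` are hypothetical names, and real chaos tooling operates at the infrastructure level rather than inside application code.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


class InjectedFault(Exception):
    """Raised in place of a real dependency failure."""


def with_fault_injection(
    func: Callable[..., T], failure_rate: float, rng: random.Random
) -> Callable[..., T]:
    """Wrap func so that a fraction of calls raise InjectedFault."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault("injected failure")
        return func(*args, **kwargs)
    return wrapper


def call_with_retry(func: Callable[[], T], attempts: int = 3) -> T:
    """Retry on injected faults; re-raise once attempts are exhausted."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return func()
        except InjectedFault:
            if attempt == attempts - 1:
                raise
    raise AssertionError("unreachable")
```

Passing in a seeded `random.Random` keeps an experiment reproducible, which helps when you need to replay a failure scenario after a fix.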

Feature flags: an enabler in testing in production

Feature flags are a common enabler when testing in production because they let you push new code to production without immediately exposing it to all your users. With feature flags, you can:

- Run silent tests safely by activating new code paths in production without exposing users to them.

- Target specific user segments (e.g., internal teams, beta users, or geo-specific cohorts).

- Roll out progressively, enabling safe exposure across rings or traffic percentages.

- Instantly roll back if metrics go south, without needing a redeploy.

- Toggle behavior at runtime without restarting services.
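
The capabilities above can be pictured with a small in-memory flag store. This is a minimal sketch under the assumption that flags are keyed by name and targeted by a single segment label. `FlagStore` and its methods are invented for this example and do not represent any vendor's SDK.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Set


@dataclass
class Flag:
    enabled: bool = False
    segments: Set[str] = field(default_factory=set)  # empty set means all users


class FlagStore:
    def __init__(self) -> None:
        self._flags: Dict[str, Flag] = {}

    def configure(self, name: str, enabled: bool, segments: Iterable[str] = ()) -> None:
        """Create or update a flag at runtime, with optional segment targeting."""
        self._flags[name] = Flag(enabled, set(segments))

    def is_on(self, name: str, user_segment: Optional[str] = None) -> bool:
        """Unknown or disabled flags are off; targeted flags match by segment."""
        flag = self._flags.get(name)
        if flag is None or not flag.enabled:
            return False
        return not flag.segments or user_segment in flag.segments

    def kill(self, name: str) -> None:
        """Instant rollback: turn the flag off without a redeploy."""
        if name in self._flags:
            self._flags[name].enabled = False
```

In production the store would be backed by a service that pushes changes to running instances, which is what makes toggling possible without restarts.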

From a tooling perspective, feature flags work well with:

- Observability platforms, for real-time KPI tracking

- Experimentation solutions, to support A/B testing among other production testing methods

- Config management and CI/CD pipelines, for dynamic rollout governance

In short: feature flags turn production into a controllable lab, where you can test behavior safely, iterate fast, and recover instantly.

Getting started with testing in production

Testing in production is essentially a shift in mindset.

And you don’t have to adopt everything at once. Start by identifying low-risk opportunities where production testing adds the most value: a backend service that can be dark launched, or a feature flag you can toggle for internal users. Build observability into your stack, implement progressive rollout mechanisms, and lean on feature flags to decouple deployment from release.

As your confidence and tooling mature, you'll move from reactive rollbacks to proactive resilience, validating correctness, performance, and business impact with every push.

Whether you’re a product owner validating backend services or a marketer optimizing UI flows, Kameleoon’s unified platform lets you test in production with feature flags, AI monitoring, and shared KPIs—all in one place.
