From A/B tests to vibes: a brief history of CRO

If you’ve worked in product, marketing, or growth lately, you’re probably at least somewhat familiar with conversion rate optimization (CRO).

And if you’ve been in the field long enough, you’ve watched experimentation expand from simple headline swaps to full-fledged, cross-team programs that span web, app, and back-end testing, increasingly powered by AI and constantly evolving.

Here’s a quick history of experimentation, from its humble beginnings in early web testing to modern vibe experimentation.

1990s-2000s: early days

Today, we think of experimentation as primarily a digital tool, but the practice has roots in the early 1990s, when advertisers running direct mail and other physical ad campaigns would A/B test messaging, timing, and layout changes.

As those same groups began to use the Internet to promote their messages, they noticed the same principles applied. They performed what we now think of as “classic” A/B tests: headline changes, copy swaps, and color switches that could be coded easily and tracked manually.

In 2006, Google launched Google Website Optimizer, which let webmasters run redirect tests. It served as an entry-level tool for the fledgling CRO field and inspired later platforms that are still in use today.

2010-2012: Optimizely, Adobe, and Google Optimize

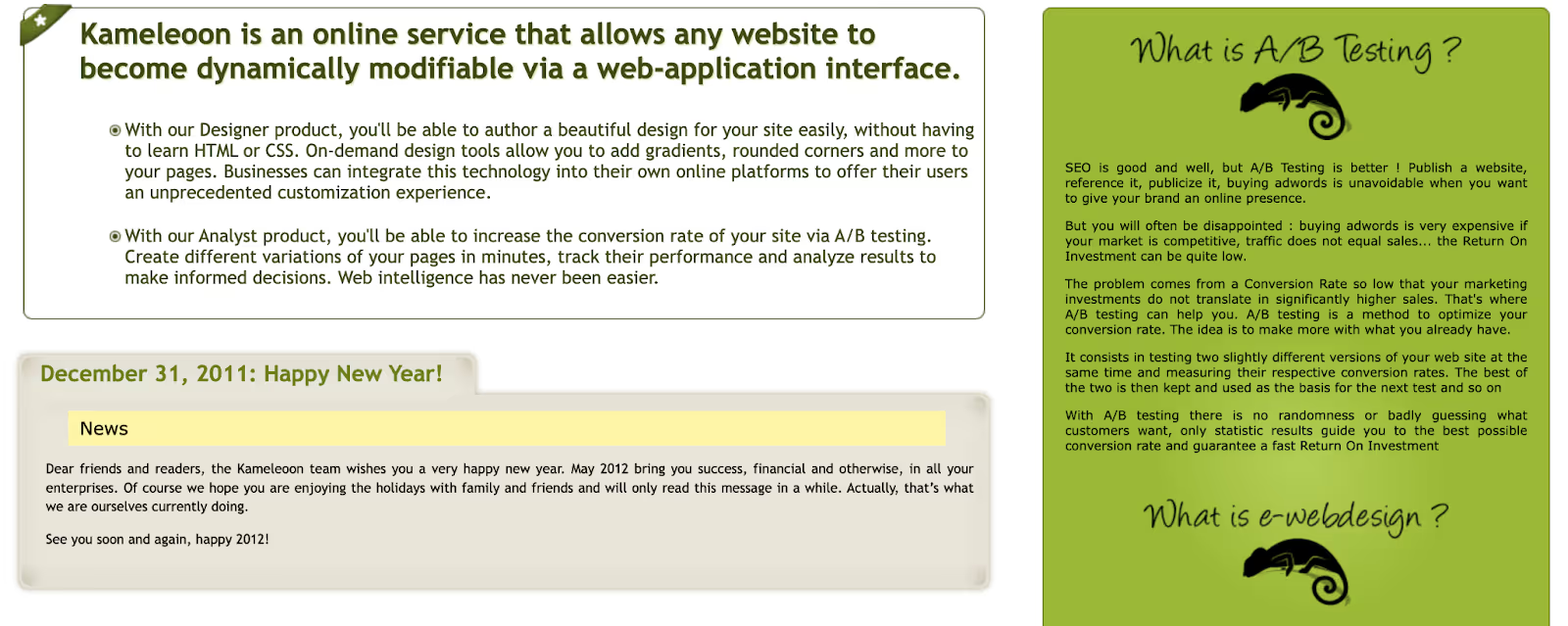

In the early 2010s, testing started to shift. Adobe entered the field when it acquired Omniture in 2009, and Omniture’s testing product went on to become Adobe Target. A group of former Google employees launched Optimizely in 2010 to move experimentation further into the hands of marketers and lessen reliance on development sprints to run basic tests. And in mid-2012, Google retired Google Website Optimizer and moved its testing features into Google Analytics as Content Experiments, a precursor to Google Optimize.

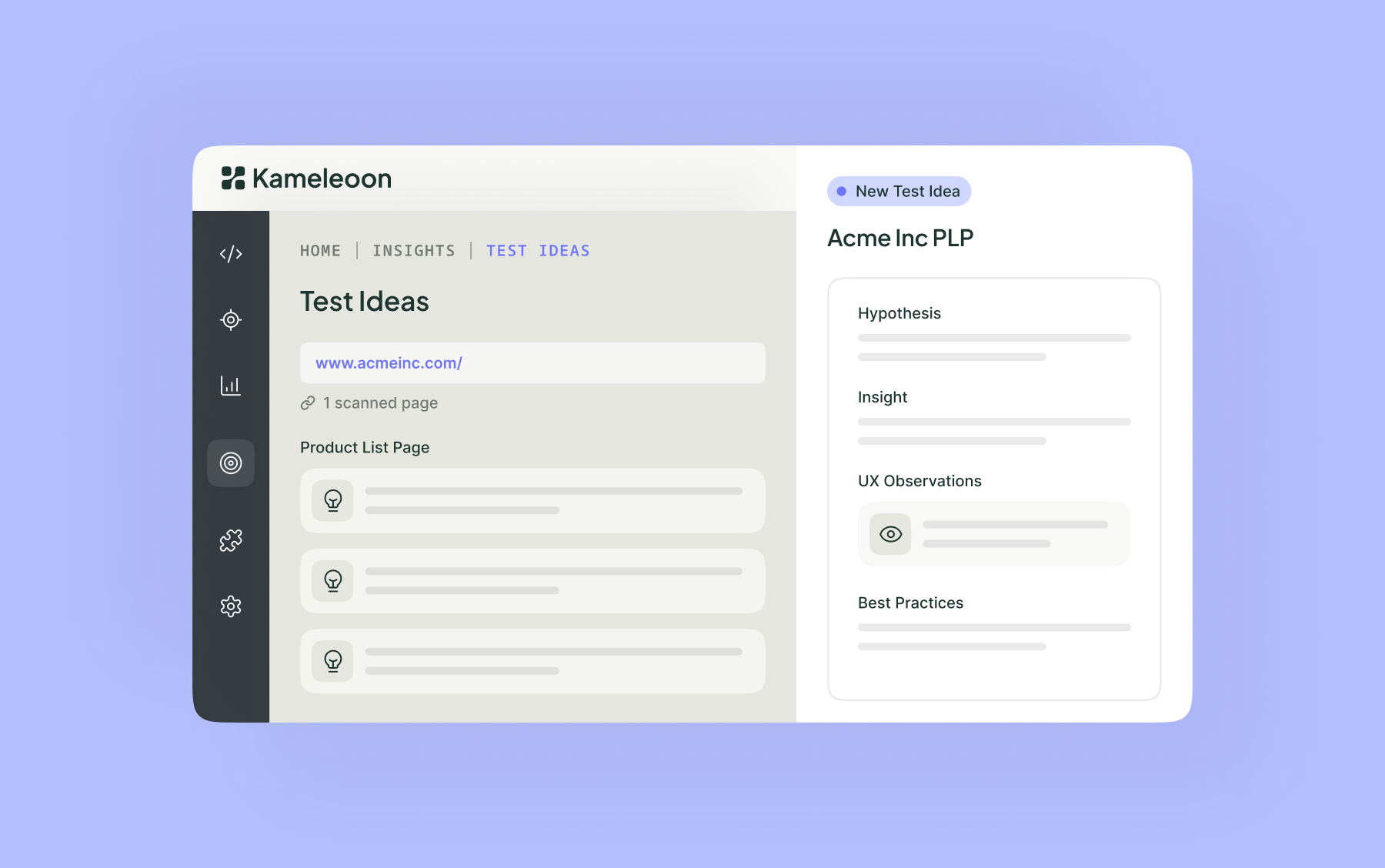

In 2012, Jean-René Boidron and Jean-Noël Rivasseau founded Kameleoon to help teams optimize and personalize their sites. The platform offered graphical, no-code tools that let web and email marketers run A/B tests largely independently of engineering teams, furthering the trend of experimentation platforms that worked for non-technical teams.

All of these new and upgraded tools meant that teams could run fast, iterative tests that embodied the spirit of CRO: short feedback loops that generate user data to inform reliable optimization projects.

2013-2015: Statistics get smarter

As the 2010s progressed, experimentation leaders worked to improve their practices, leading to big changes for the industry. In 2013, Microsoft researchers published CUPED (short for “Controlled-experiment Using Pre-Experiment Data”), a variance reduction technique that lets teams detect effects faster and more reliably by using each user’s pre-experiment behavior to explain away part of the noise in a metric. In practice, this means reaching statistical significance with smaller samples in less time.
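To make the idea concrete, here is a minimal sketch of the core CUPED adjustment in plain Python. The data is simulated and the function name `cuped_adjust` is hypothetical. A production setup would estimate the coefficient on data pooled across all variations and apply it to each one, but the arithmetic is the same: subtract the part of the metric that pre-experiment behavior already predicts.

```python
import random
import statistics


def cuped_adjust(metric, covariate):
    """Return CUPED-adjusted values: y - theta * (x - mean(x))."""
    mean_x = statistics.fmean(covariate)
    mean_y = statistics.fmean(metric)
    # theta = cov(x, y) / var(x): the coefficient that removes the most variance
    cov_xy = sum(
        (x - mean_x) * (y - mean_y) for x, y in zip(covariate, metric)
    ) / (len(metric) - 1)
    theta = cov_xy / statistics.variance(covariate)
    return [y - theta * (x - mean_x) for x, y in zip(covariate, metric)]


rng = random.Random(42)
# Simulated (not real) data: spend per user before the test,
# and spend during the test that correlates with it.
pre = [rng.gauss(50, 10) for _ in range(2000)]
post = [0.8 * x + rng.gauss(10, 5) for x in pre]

adjusted = cuped_adjust(post, pre)
raw_var = statistics.variance(post)
adj_var = statistics.variance(adjusted)
print(f"raw variance: {raw_var:.1f}, adjusted variance: {adj_var:.1f}")
```

The adjusted metric keeps the same mean as the raw one but has a smaller variance, which is why the same effect can be detected with fewer visitors.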

Around the same time, platforms introduced sequential testing and other controls to limit type I errors (false positives); Optimizely’s Stats Engine is one example. These innovations increased the average CRO specialist’s awareness of concepts like peeking, statistical significance, and sample size rules.
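A small simulation shows why peeking is a problem. The sketch below runs A/A tests, where both arms share the same true conversion rate, so every “significant” result is a false positive. It compares checking a fixed-threshold z-test repeatedly against checking it once at the end. All parameters and function names are illustrative assumptions, and this is not how any particular vendor’s engine works.

```python
import math
import random


def z_stat(conv_a, n_a, conv_b, n_b):
    """Two-proportion z statistic using a pooled conversion rate."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    return (conv_b / n_b - conv_a / n_a) / se


def false_positive_rates(runs=400, n_per_arm=2000, peek_every=100,
                         rate=0.1, seed=1):
    """Return (rate with peeking, rate with a single final check)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        flagged = False
        for i in range(1, n_per_arm + 1):
            conv_a += int(rng.random() < rate)
            conv_b += int(rng.random() < rate)
            # A "peek": stop-worthy if |z| crosses the 5% threshold right now
            if i % peek_every == 0 and abs(z_stat(conv_a, i, conv_b, i)) > 1.96:
                flagged = True
        peeking_hits += int(flagged)
        final_z = z_stat(conv_a, n_per_arm, conv_b, n_per_arm)
        final_hits += int(abs(final_z) > 1.96)
    return peeking_hits / runs, final_hits / runs


peeking_rate, final_rate = false_positive_rates()
print(f"false positives with peeking: {peeking_rate:.1%}")
print(f"false positives with one final check: {final_rate:.1%}")
```

Because the final check is also one of the peeks, the peeking rate can never be lower than the single-check rate. Sequential testing methods adjust the decision threshold so that repeated looks stay within the intended error budget.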

2016-2022: The AI wave & machine learning

As early as 2010, researchers were discussing the value of machine learning algorithms for CRO. But it wasn’t until 2016 that machine learning became a dependable part of the experimenter’s toolkit, when Kameleoon released AI Predictive Targeting. By 2017, releases like Stats Accelerator by Optimizely and Auto-Target by Adobe had further spread the use of AI in experimentation.

These releases introduced multi-armed bandits to a broader audience. “Bandits” are machine learning algorithms that dynamically shift traffic toward the better-performing variations while a test is still running, so they keep learning about audiences even as they allocate traffic.

In 2018, Google Optimize followed the trend and rolled out Personalization, introducing contextual bandits for personalization.
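As an illustration of how a bandit shifts traffic, here is a short sketch of Thompson sampling, one common bandit strategy. The conversion rates are made up and the function name is hypothetical. Nothing here is meant to describe how any of the products named above implement their allocation.

```python
import random


def thompson_sampling(true_rates, visitors, seed=0):
    """Simulate traffic allocation; return visitors sent to each variation."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible conversion rate for each variation from its
        # Beta posterior (uniform prior), then pick the highest draw.
        draws = [
            rng.betavariate(s + 1, f + 1)
            for s, f in zip(successes, failures)
        ]
        arm = draws.index(max(draws))
        # Simulate whether this visitor converts on the chosen variation
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]


# Assumed rates: variation 0 converts at 4%, variation 1 at 12%
traffic = thompson_sampling([0.04, 0.12], visitors=5000)
print(traffic)
```

Early on, both variations receive traffic because the algorithm is uncertain. As evidence accumulates, most visitors are routed to the stronger variation, which is the trade-off between learning and earning that bandits are designed to manage.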

Until the early 2020s, machine learning in experimentation largely took the form of bandits. Then, late in 2022, OpenAI launched ChatGPT, changing the way we think about AI on a global scale.

2023-2025: LLMs, prompted A/B tests, & vibe experimentation

As LLMs surged in popularity in the early 2020s, simple AI chatting turned into vibe coding: building full platforms, apps, and digital experiences with code generated by AI.

Experimentation platforms began applying the idea to their products. In 2025, Kameleoon opened its beta for the first true vibe experimentation tool: Prompt-Based Experimentation (PBX), which uses AI to cut the time required to build web experiments by up to 89%.

Tools like PBX give CRO, marketing, product, and data teams more control by reducing the time to build, configure, launch, and analyze client-side A/B tests. With a simple, natural-language prompt, PBX generates variants, sets targeting, and delivers analyses.

2026: the future?

At every step, CRO has become increasingly geared toward helping more teams experiment faster and more reliably. Vibe experimentation is the latest step in this process.

Agentic AI is also starting to show promise for experimentation leaders in all fields. As agentic AI becomes more prevalent in CRO, multiple AI agents will work with experimenters, proactively suggesting hypotheses, targeting options, and layout ideas. The experimenter’s role will be to review and choose experiments, placing heavier emphasis on governance, guardrails, and statistical rigor.

“Today, users can prompt to build a full user experience. Tomorrow, users will be ‘prompted’ with ideas and variations from agentic AI. But at all stages, humans stay in control and choose the ideas they want to test.”

—Frederic de Todaro

CPO, Kameleoon

With the potential to launch so many more experiments per month, human experimenters will be as invaluable as air traffic controllers, keeping everything on track and prioritizing hypotheses to test.

It also means that unified, agentic platforms like Kameleoon, which offer vibe experimentation, a multi-stat engine, and robust data accuracy guardrails, are best poised to embrace the upcoming agentic age of experimentation.

While AI will continue to disrupt and transform how we experiment, the basics stay the same: collecting data to improve user experiences will remain the heart of a solid CRO program.
